Chapter 3 Linear regression

Preliminary code

This code is needed to make other code below work:

library(gridExtra) # for grid.arrange() to print plots side-by-side

require(languageR)

library(dplyr)

library(ggplot2)

library(arm)

## loads alternativesMcGillLing620.csv from OSF project for Wagner (2016) data

alt <- read.csv(url("https://osf.io/6qctp/download"))

## loads french_medial_vowel_devoicing.txt from OSF project for Torreira & Ernestus (2010) data

df <- read.delim(url("https://osf.io/uncd8/download"))

## loads halfrhymeMcGillLing620.csv from OSF project for Harder (2013) data

halfrhyme <- read.csv(url("https://osf.io/37uqt/download"))Note: Most answers to questions/exercises not listed in text are in Solutions.

This chapter introduces linear regression. The broad topics we will cover are:

Regression: general introduction

Simple linear regression

Multiple linear regression

Linear regression assumptions, model criticism, and interpretation

Model comparison

3.1 Regression: General introduction

First, what is “regression”? Chatterjee & Hadi (2012) define it as “a conceptually simple method for investigating functional relationships among variables”:

The variable to be explained is called the response, often written \(Y\)

The explanatory variables are called predictors, often written \(X_1, X_2, \ldots\).

Here is an example of a study that uses regression analysis:

Questions:

What is the response variable in this study?

What could be a predictor variable?

3.1.1 Linear models

The relationship between variables is captured by a regression model: \[\begin{equation*} Y = f(X_1, X_2, ..., X_p) + \epsilon \end{equation*}\]In this model, \(Y\) is approximated by a function of the predictors, and the difference between the model and reality is called the error (\(\epsilon\)).

Throughout this book we will be dealing exclusively with linear models (including “generalized linear models”, like logistic regression), where the model takes the form:

\[\begin{equation} Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_p X_p + \epsilon \tag{3.1} \end{equation}\]The \(\beta\)’s are called regression coefficients.

This turns out to be an extremely general class of model, which can be applied to a wide range of phenomena. Some important kinds of models that don’t appear at first glance to fit Equation (3.1) can be linearized into this form.

3.1.2 Terminology

We will consider two broad types of regression in this book:

linear regression, where the response (\(Y\)) is a continuous variable. An example would be modeling reaction time (

RTlexdec) as a function of word frequency (WrittenFrequency) for theenglishdataset.Later we will consider logistic regression, where the response (\(Y\)) is binary: 0 or 1. An example would be modeling whether tapping occurs or not (

tapping) as a function ofvowelDurationandspeakingRatein thetappingdataset.

Regression with just one predictor is called simple, while regression with multiple predictors is called multiple. The two examples just given would be “simple linear regression” and “multiple logistic regression”.

Predictors can be continuous, such as milk consumption, or categorical—also called “factors”—such as participant gender, or word type.

Certain special cases of linear models that are common go by names such as:

Analysis of variance: continuous \(Y\), categorical predictors. (Models variability between groups)

Analysis of covariance: continuous \(Y\), mix of categorical and continuous predictors.

We are not covering these cases in this book, but ANOVAs (and ANCOVAs) are widely used in language research, and you may have seen them before. ANOVAs can be usefully thought of as just a special case of regression, as discussed in Vasishth & Broe (2011), R. Levy (2012), Gelman & Hill (2007). Once you understand linear regression well, understanding ANOVA analyses is relatively straightforward.

3.1.3 Steps and assumptions of regression analysis

Regression analyses have five broad steps, as usefully discussed by Chatterjee & Hadi (2012):

- Statement of the problem

- Ex: Does milk consumption affect height gain?

- Selection of potentially relevant variables

- Ex: Height gain, daily milk consumption, age, and sex.

- Data collection

- Ex: data from an existing database.

- Model specification & fitting

- Ex: height gain = \(\beta_0 + \beta_1 \cdot\) milk consumption \(+ \beta_2 \cdot\) sex \(+\) error

- Model validation and criticism

It is important to remember that the validity of a regression analysis depends on the assumptions of the data and model.

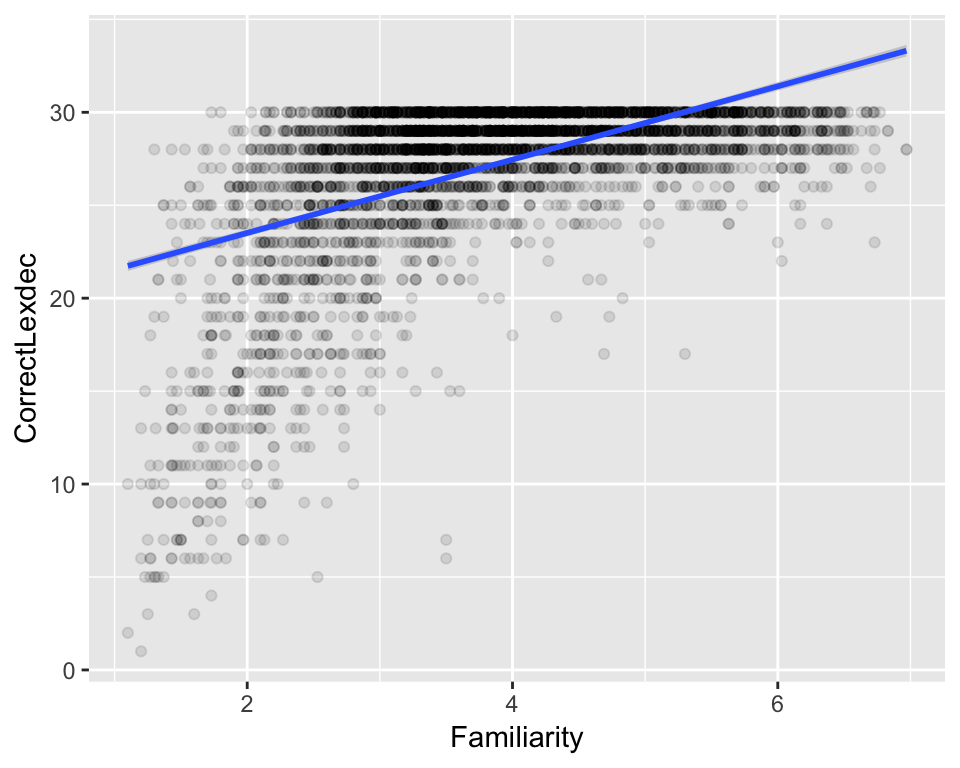

For example, if you are modeling data where \(Y\) has a maximum value, fitting a simple linear regression (= a line) to this data doesn’t make conceptual sense, a priori. Here’s an example using the english dataset:

library(languageR) ## makes the 'english' dataset available

ggplot(aes(x = Familiarity, y = CorrectLexdec), data = english) + geom_point(, alpha=0.1) + geom_smooth( method='lm')

Because CorrectLexdec has a maximum value of 30, fitting a line doesn’t make sense—the predicted value when Familiarity=6 is above 30, but this is impossible given the definition of CorrectLexdec.

For now, we will not go into what the assumptions of linear regression are, and just assume that they are met. After introducing simple and multiple linear regressions, we’ll come back to this issue in Section 3.4:

What are the assumptions (of linear regression)?

For each assumption, how do we determine whether it’s valid?

How much of a problem is it, and what can be done, if the assumption is not met?

Note that R almost never checks whether your data and model meet regression analysis assumptions, unlike other software (e.g. SPSS, sometimes).

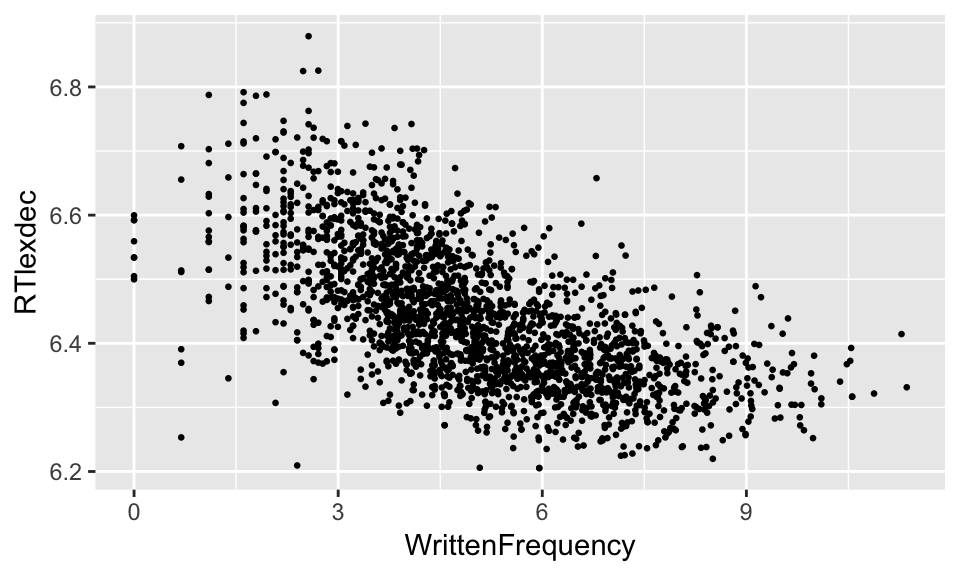

3.2 Simple linear regression

The simplest application of simple linear regression (SLR) is to model an association between two continuous variables.

Example: english data, young participants only

\(X\):

WrittenFrequency(= predictor)\(Y\):

RTlexdec(= response)

young <- filter(english, AgeSubject=='young')

ggplot(young, aes(WrittenFrequency, RTlexdec)) +

geom_point(size=0.5)

One way to describe this kind of association which you may be familiar with is a correlation coefficient which gives us two types of information about the relationship:

- direction: \(r = -0.434\)

- \(\implies\) negative relationship

- strength: \(r^2 = 0.189\)

- \(\implies\) weak relationship (\(0 \le r^2 \le 1\))

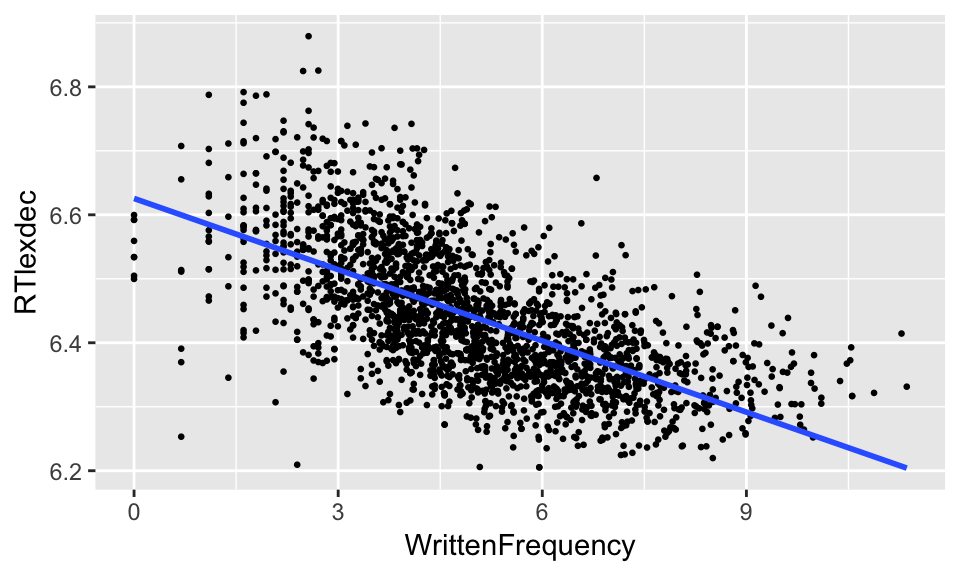

A simple linear regression gives a line of best fit:

ggplot(young, aes(WrittenFrequency, RTlexdec)) +

geom_point(size=0.5) +

geom_smooth(method="lm", se=F)

which gives some information not captured by a correlation coefficient:

Prediction of \(Y\) for a given \(X\)

Numerical description of relationship

Regression gives both types of information, and more.

3.2.1 SLR: Continuous predictor

The formula for simple linear regression, written for the \(i^{\text{th}}\) observation, is:

\[\begin{equation} y_i = \underbrace{\beta_0}_{\text{intercept}} + \underbrace{\beta_1}_{\text{slope}} x_i + \epsilon_i \tag{3.2} \end{equation}\]In this expression:

\(\beta_0, \beta_1\) are coefficients

- For the \(i^{\text{th}}\) observation

\(x_i\) is the value of the predictor

\(y_i\) is the value of the response

\(\epsilon_i\) is the value of the error (or residual)

This is our first linear model of a random variable (\(Y\)) as a function of a predictor variable (\(X\)). The actual model, written not for individual observations, is written:

\[ Y = \beta_0 + \beta_1 X + \epsilon \]

That is, we use notation like \(X\) for a variable, and notation like \(x_5\) for actual values that it takes on.

For example, for the english dataset, with \(Y\) = RTlexdec and \(X\) = WrittenFrequency:

head(dplyr::select(english, WrittenFrequency, RTlexdec))## WrittenFrequency RTlexdec

## 1 3.912023 6.543754

## 2 4.521789 6.397596

## 3 6.505784 6.304942

## 4 5.017280 6.424221

## 5 4.890349 6.450597

## 6 4.770685 6.531970\(x_2\)=4.5217886 (predictor value for second observation)

\(y_1\)=6.5437536 (response value for first observation)

Question

- What is \(y_5\)?

3.2.2 SLR: Parameter estimation

To get a line of best fit, we want: \(\beta_0\) and \(\beta_1\), the population values. Recall that we can’t actually observe these (Sec.1.1), so we obtain sample estimates, written \(\hat{\beta}_0, \hat{\beta}_1\).

Once sample estimates are specified, Equation (3.2) gives fitted values for each observation, written \(\hat{y}_i\): \[ \hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i \]

Note that there are no residuals in this equation—\(\epsilon_i\) are again population values, which we can’t observe. Our estimates of the residuals, given an estimated line of best it, are written \(e_i\) (error): \[ e_i = y_i - \hat{y}_i \]

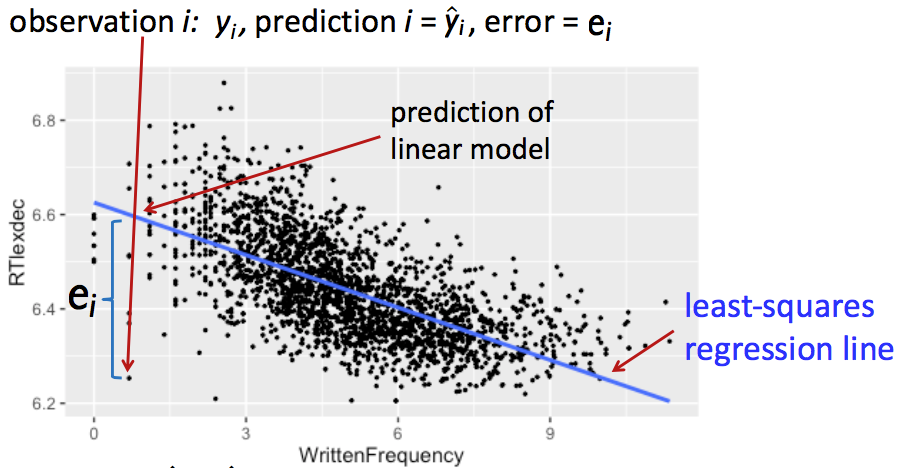

This diagram shows the relationship between some of these quantities, for a single observation:

Our goal is to find coefficient values that minimize the difference between observed and expected values—the magnitudes of error (\(|e_i|\)), which are minimized by minimizing the squared errors (\(e_i^2\)).

We choose \(\hat{\beta}_0\), \(\hat{\beta}_1\) that minimize the sum of the \(e_i^2\); these are called the least-squares estimates.

One useful property of the resulting regression line is that it always passes through the point (mean(\(X\)), mean(\(Y\))). A consequence is that SLR is easily thrown off by observations which are outliers in \(X\) or \(Y\) (why?).

Example

Here is an example of fitting an SLR model in R, of reaction time vs. frequency for young speakers in the english dataset:

young <- filter(english, AgeSubject == "young")

m <- lm(RTlexdec ~ WrittenFrequency, young)The model output is:

m##

## Call:

## lm(formula = RTlexdec ~ WrittenFrequency, data = young)

##

## Coefficients:

## (Intercept) WrittenFrequency

## 6.62556 -0.03711The interpretation of the two coefficients is:

\(\beta_0\): the predicted \(Y\) value when \(X = 0\)

m$coefficients[1]## (Intercept) ## 6.625556\(\beta_1\): predicted change in \(Y\) for every unit change in \(X\)

m$coefficients[2]## WrittenFrequency ## -0.03710692

Questions:

What is the predicted

RTlexdecwhen:

WrittenFrequency= 5?

WrittenFrequency= 10?

3.2.3 Hypothesis testing

The least-squared estimators are normally-distributed. These are sample estimates, so we can also approximate the standard errors of the estimators: \[\begin{equation*} SE(\hat{\beta}_0) \quad SE(\hat{\beta}_1) \end{equation*}\]and apply \(t\)-tests to test for significance and obtain confidence intervals (CI) for the coefficients.

In particular, we are testing the null hypotheses of no relationship:

- \(H_0~:~\beta_1 = 0\)

(and similarly for \(\beta_0\)).

We then apply a \(t\)-test, using test statistic: \[\begin{align} t_1 &= \frac{\hat{\beta}_1}{SE(\hat{\beta}_1)} \\ df &= n - 2 \nonumber \end{align}\](where \(n\) = number of observations).

The resulting \(p\)-value tells us how surprised are we to get a slope this far from zero (\(\beta_1\)) under \(H_0\). (With this high a standard error, given this much data.)

To see the results of these hypothesis tests in R:

summary(lm(RTlexdec~WrittenFrequency, young))##

## Call:

## lm(formula = RTlexdec ~ WrittenFrequency, data = young)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.34664 -0.05523 -0.00546 0.05167 0.34877

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.6255559 0.0049432 1340.34 <2e-16 ***

## WrittenFrequency -0.0371069 0.0009242 -40.15 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.08142 on 2282 degrees of freedom

## Multiple R-squared: 0.414, Adjusted R-squared: 0.4137

## F-statistic: 1612 on 1 and 2282 DF, p-value: < 2.2e-16The \(t\)-values and associated \(p\)-values are in the Coefficients: table, where the first row is for \(\hat{\beta}_0\) and the second row is for \(\hat{\beta}_1\).

Questions:

- Why does the column for the \(p\)-value say

Pr(>|t|)?

Having the SEs of the coefficients also lets us compute 95% confidence intervals for the least-squared estimators. Going from the null hypothesis (\(H_0\)) above: if the 95% CI of the slope (\(\beta_1\)) does not include 0, we can reject \(H_0\) with \(\alpha = 0.05\).

In R, you can get confidence intervals for a fitted model as follows:

confint(lm(RTlexdec~WrittenFrequency, young))## 2.5 % 97.5 %

## (Intercept) 6.61586227 6.63524948

## WrittenFrequency -0.03891921 -0.03529463Neither CI includes zero, consistent with the very low \(p\)-values in the model table above.

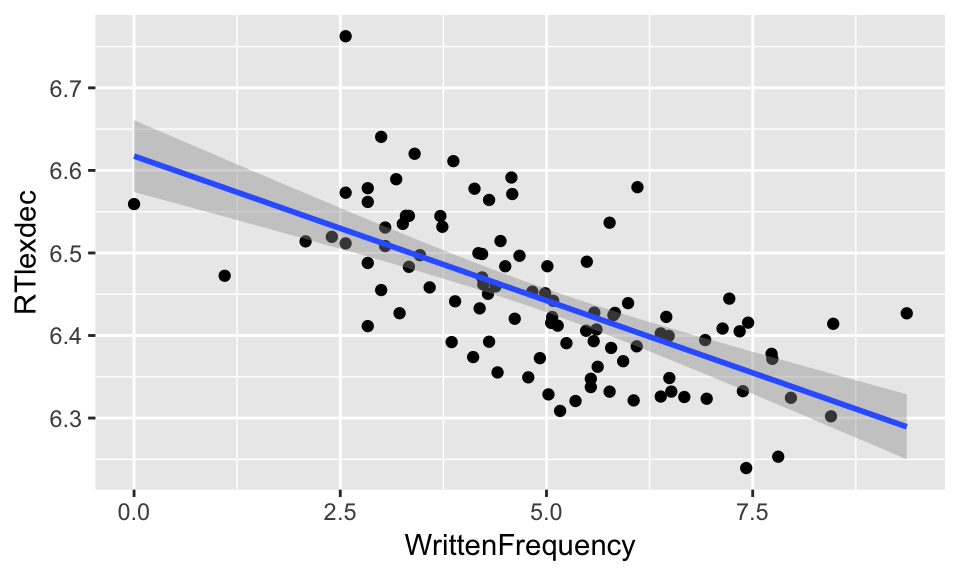

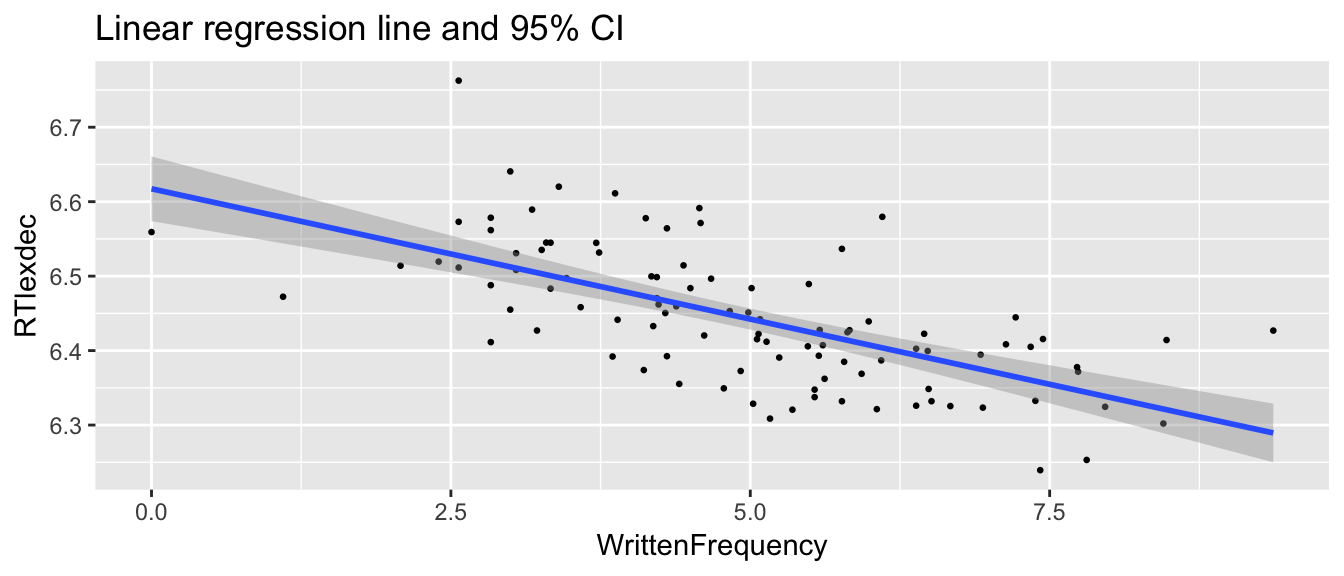

Example: Small subset

For the full dataset of english young speakers, it’s a little silly to do hypothesis testing given how much data there is and the clarity of the pattern—the line of best fit has a tiny confidence interval. Just for exposition, let’s look at the line of best fit for a subset of just \(n=100\) points:

set.seed(2903) # This makes the following "random" sampling step always give the same resuult

young_sample <- young %>% sample_n(100)

ggplot(young_sample, aes(WrittenFrequency, RTlexdec)) +

geom_point() +

geom_smooth(method="lm")

Questions:

- What does

geom_smooth(method="lm")do?

In this plot, the shading around the line is the 95% confidence interval. “Can we reject \(H_0\)?” is equivalent to asking, “Can a line with 0 slope cross the shaded area through the range of \(x\) (and going through (mean(\(X\)), mean(\(Y\)))?

3.2.4 Quality of fit

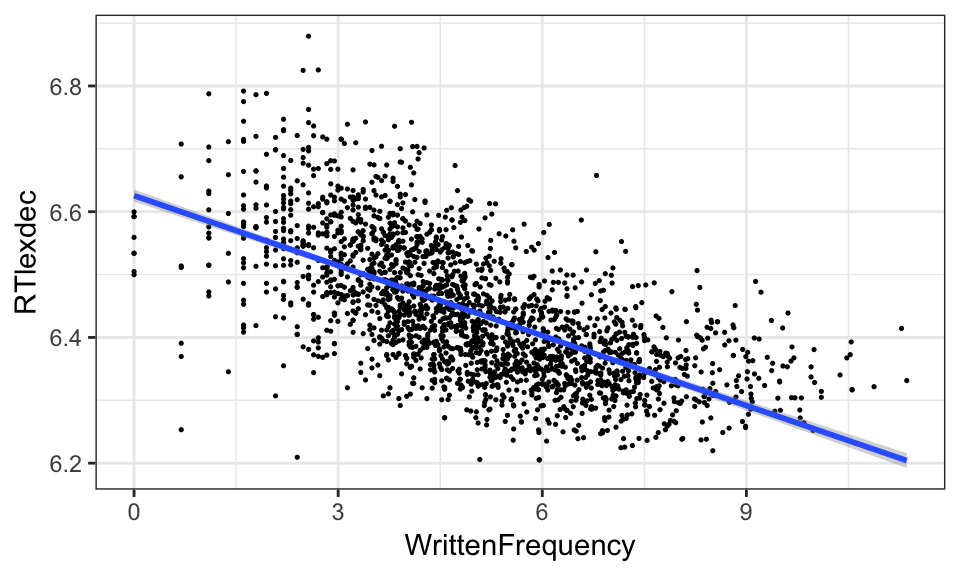

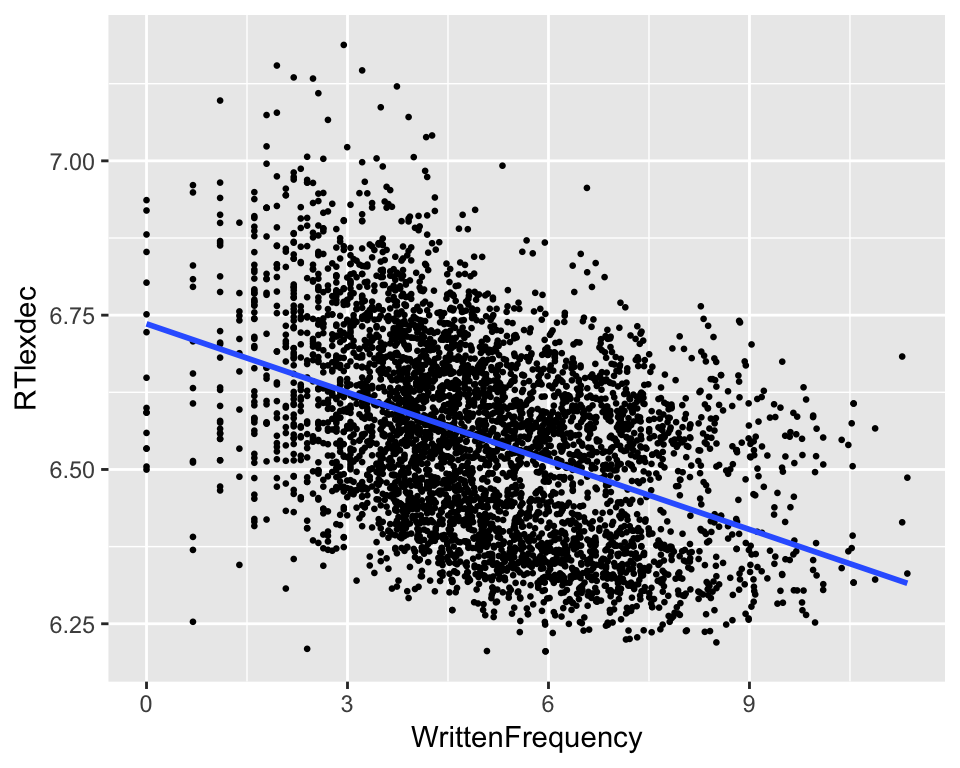

Here is the model we have been discussing, plotted on top of the empirical data:

young <- filter(english, AgeSubject == "young")

ggplot(young, aes(WrittenFrequency, RTlexdec)) +

geom_point(size=0.25) +

geom_smooth(method="lm") +

theme_bw()

We often want a metric quantifying how well a model fits the data—the goodness of fit.

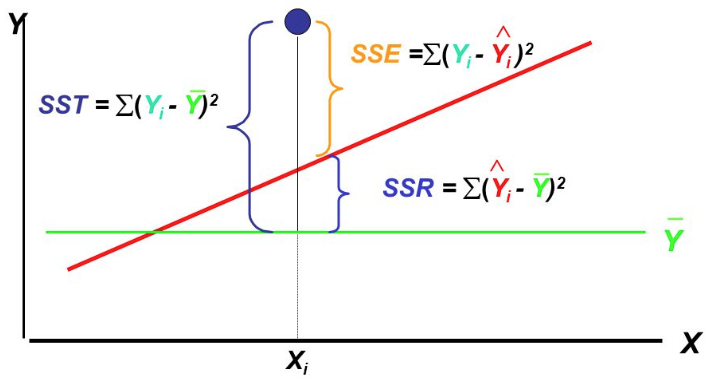

For simple linear regression, we can derive such a metric by first defining three quantities:

SST: Total sum of squares

SSR: Sum of squares due to regression

SSE: Sum of squares due to error

(Source: slides from Business Statistics: A First Course (Third edition))

The fundamental equality is: SST = SSR + SSE

Intuitively we want a measure of how much of SST is accounted for by SSR. This is \(R^2\): the proportion of total variability in \(Y\) accounted for by \(X\): \[\begin{equation} R^2 = \frac{SS_{\text{fit}}}{SS_{\text{total}}} \end{equation}\]\(R^2\) always lies between 0 and 1, which can conceptually be thought of as:

- 0: none of the variance in \(Y\) is accounted for by \(X\)

- 1: all of the variance `` ``

It turns out \(R^2\) is also the square of (Pearson’s) correlation, \(r\).

Example

Here is the SLR model fitted to a subset of 100 data points, repeated for convenience:

set.seed(2903)

d <- english %>% filter(AgeSubject=="young") %>% sample_n(100)

summary(lm(RTlexdec~WrittenFrequency, d))##

## Call:

## lm(formula = RTlexdec ~ WrittenFrequency, data = d)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.127857 -0.045072 -0.005826 0.042917 0.235034

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.61733 0.02190 302.236 < 2e-16 ***

## WrittenFrequency -0.03501 0.00419 -8.354 4.43e-13 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.07121 on 98 degrees of freedom

## Multiple R-squared: 0.4159, Adjusted R-squared: 0.41

## F-statistic: 69.79 on 1 and 98 DF, p-value: 4.43e-13In the model table, note the values of the sample statistic under t value and its significance in the Pr(>|t|) column in the WrittenFrequency row, as well as of the correlation statistic Multiple R-squared.

This is a hypothesis test for Pearson’s \(r\) for the same data, checking whether it is significantly different from 0:

cor.test(d$WrittenFrequency, d$RTlexdec)##

## Pearson's product-moment correlation

##

## data: d$WrittenFrequency and d$RTlexdec

## t = -8.354, df = 98, p-value = 4.43e-13

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## -0.7467533 -0.5135699

## sample estimates:

## cor

## -0.644931Note that \(t\), \(p\), and \(r\) (the square root of Multiple R-squared) are exactly the same. Thus, fitting a simple linear regression and conducting a correlation test give us two ways of finding the same information.

3.2.5 Categorical predictor

Simple linear regression easily extends to the case of a binary \(X\) (a factor).

Example

englishdataPredictor:

AgeSubjectResponse:

RTlexdec

Everything is the same as for the case where \(X\) is continuous, except now we have:

\(x_i = 0\): if

AgeSubject == "old"\(x_i = 1\): if

AgeSubject == "young"

The regression equation is exactly the same as Equation (3.2):

\[ y_i = \beta_0 + \beta_1 x_i + \epsilon_i \]

Only the interpretation of the coefficients differs:

\(\beta_0\): mean

RTlexdecwhenAgeSubject == "old"(since \(x_i = 0 \iff\)AgeSubject == "old")\(\beta_1\): difference in mean

RTlexdecbetweenyoungandold

Hypothesis tests, \(p\)-values, CIs, and goodness of fit work exactly the same as for a continuous predictor.

Example

Suppose we want to test whether the difference in group means is statistically significantly different from 0:

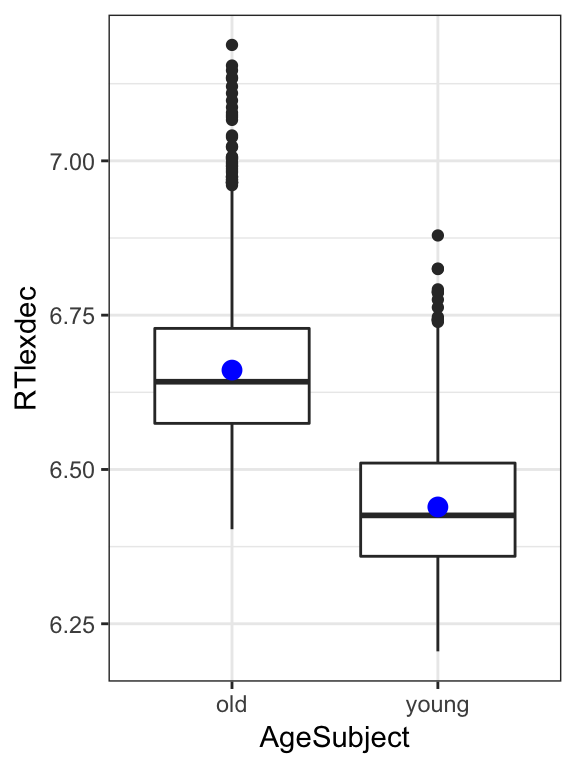

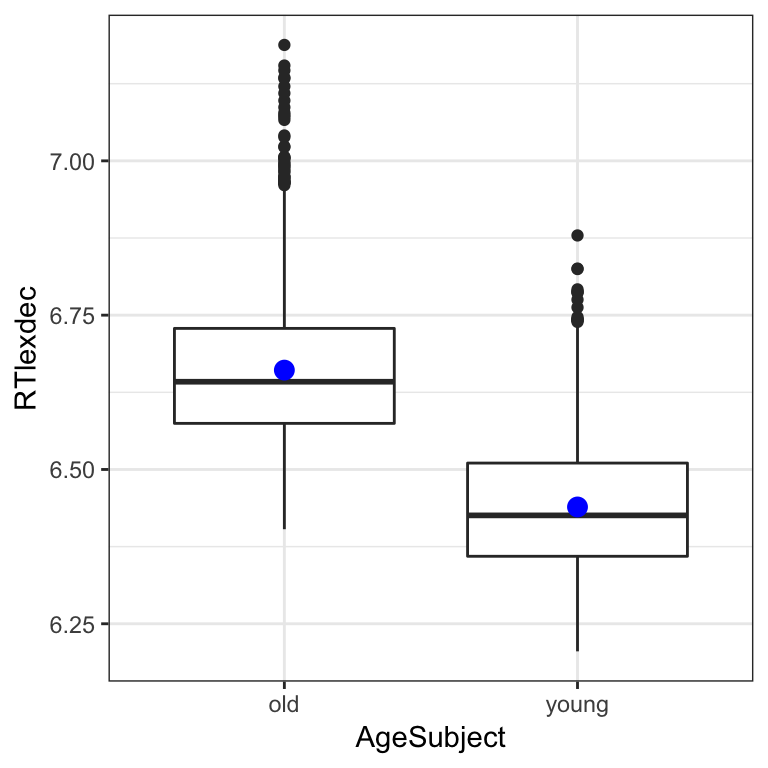

english %>% ggplot(aes(AgeSubject, RTlexdec)) +

geom_boxplot() +

stat_summary(fun.y="mean", geom="point", color="blue", size=3) +

theme_bw()

Question:

- What goes in the blanks?

m1 <- lm(_____ ~ _____, english)

summary(m1)Note the \(t\) and \(p\)-values for AgeSubjectyoung, we’ll need them in a second.

3.2.6 SLR with a binary categorical predictor vs. two-sample \(t\)-test

Conceptually, we just did the same thing as a two-sample \(t\)-test—tested the difference between two groups in the value of a continuous variable. Let’s see what the equivalent \(t\)-test gives us:

t.test(RTlexdec ~ AgeSubject, english, var.equal=T)##

## Two Sample t-test

##

## data: RTlexdec by AgeSubject

## t = 67.468, df = 4566, p-value < 2.2e-16

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## 0.2152787 0.2281642

## sample estimates:

## mean in group old mean in group young

## 6.660958 6.439237(The var.equal option forces the \(t\)-test to assume equal variances in both groups, which is one assumption of linear regression.)

You should find that both tests give identical \(t\) and \(p\)-values. So, a \(t\)-test can be thought of as a special case of simple linear regression.

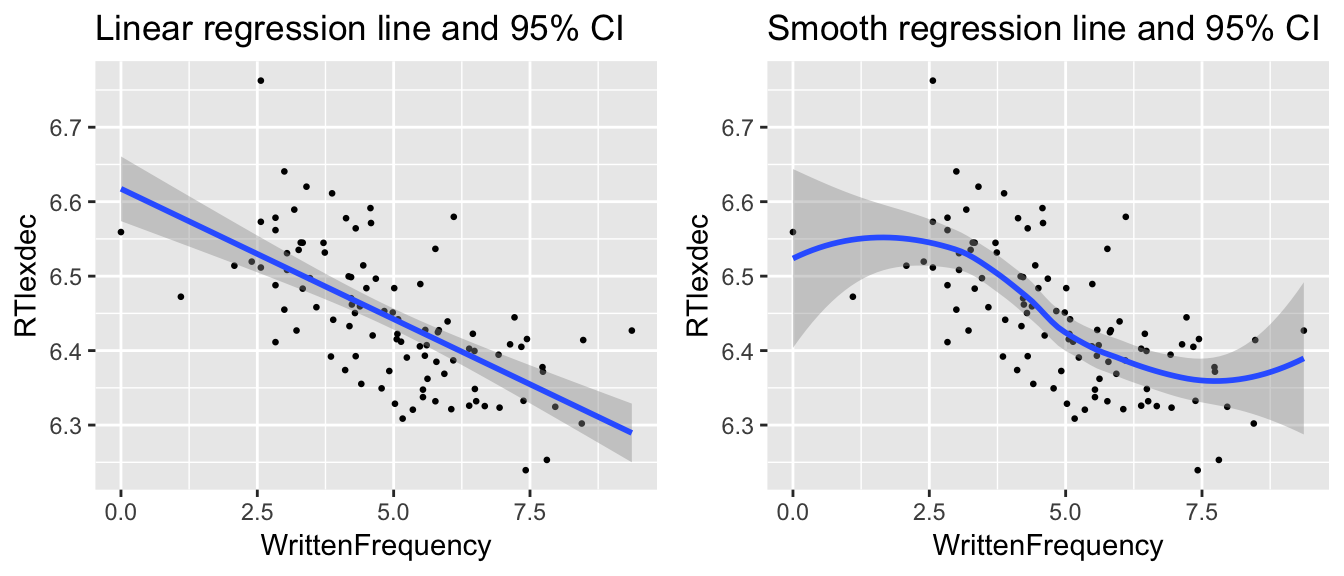

3.2.6.1 Bonus: Linear vs. smooth regression lines

We have forced an SLR fit in the plots above using the method='lm' flag, but by default geom_smooth uses a nonparametric smoother (such as LOESS, the geom_smooth default for small samples):

young <- filter(english, AgeSubject=='young')

set.seed(2903)

young_sample <- young %>% sample_n(100)

day7_plt1 <- ggplot(young_sample, aes(WrittenFrequency, RTlexdec)) +

geom_point(size=0.5) +

geom_smooth(method="lm") +

ggtitle("Linear regression line and 95% CI")

day7_plt2 <- ggplot(young_sample, aes(WrittenFrequency, RTlexdec)) +

geom_point(size=0.5) +

geom_smooth() +

ggtitle("Smooth regression line and 95% CI")

grid.arrange(day7_plt1, day7_plt2, ncol = 2)

Note the differences in the two plots:

Linear/nonlinear

CI widths related to distance from mean, versus amount of data nearby

(Hence “Local” in LOESS.)

3.3 Multiple linear regression

In multiple linear regression, we use a linear model to predict a continuous response with \(p\) predictors (\(p>1\)):

\[ Y = \beta_0 + \beta_1 X_i + \beta_2 X_2 + \cdots + \beta_p X_p + \epsilon \]

Each predictor \(X_i\) can be continuous or categorical.

Example: RT ~ frequency + age

For the english dataset, let’s model reaction time as a function of word frequency and participant age. Recall that in addition to the word frequency effect, older speakers react more slowly than younger speakers:

english %>% ggplot(aes(AgeSubject, RTlexdec)) +

geom_boxplot() +

stat_summary(fun.y="mean", geom="point", color="blue", size=3) +

theme_bw()

The response and predictors are:

\(Y\):

RTlexdec\(X_1\):

WrittenFrequency(continuous)\(X_2\):

AgeSubject(categorical) (0:old, 1:young)

Because \(p=2\), the regression equation for observation \(i\) is

\[

y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \epsilon_i

\] where \(x_{ij}\) means the value of the \(j^{\text{th}}\) predictor for the \(i^{\text{th}}\) observation.

To fit this model in R:

m2 <- lm(RTlexdec~WrittenFrequency+AgeSubject, english)Summary of the model:

summary(m2)##

## Call:

## lm(formula = RTlexdec ~ WrittenFrequency + AgeSubject, data = english)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.34622 -0.06029 -0.00722 0.05178 0.44999

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.8467921 0.0039792 1720.64 <2e-16 ***

## WrittenFrequency -0.0370103 0.0007033 -52.62 <2e-16 ***

## AgeSubjectyoung -0.2217215 0.0025930 -85.51 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.08763 on 4565 degrees of freedom

## Multiple R-squared: 0.6883, Adjusted R-squared: 0.6882

## F-statistic: 5040 on 2 and 4565 DF, p-value: < 2.2e-16This model tells us that the least-squares solution for the regression line is: \[ \texttt{RTlexdec} = \underbrace{6.846}_{\beta_0} + \underbrace{(- 0.037)}_{\beta_1} \cdot \texttt{WrittenFrequency} + \underbrace{(- 0.221)}_{\beta_2} \cdot \texttt{AgeSubject} + \text{error} \]

Questions:

- What RT does the model predict for an observation with

WrittenFrequency=5 andAgeSubject=‘old’?

6.846 + (-0.037 * 5) + (-0.221 * 0)## [1] 6.661Note that in this MLR model, the interpretation of each coefficient is:

\(\beta_0\): predicted value when all predictors = 0

\(\beta_1\), \(\beta_2\): change in a predictor when others are held constant

For example, the difference between old and young speakers in RT is 0.221, when word frequency is held constant.

3.3.1 Goodness of fit metrics

With \(R^2\) defined as for simple linear regression, in terms of sums of squares, the exact same measure works to quantify goodness of fit of a multiple linear regression: \[ R^2 = \frac{SS_{\text{fit}}}{SS_{\text{total}}} \] sometimes called multiple \(R^2\).

An alternative to \(R^2\) when there’s more than one predictor (MLR) is adjusted \(R^2\), defined as: \[ R^2 = 1 - \frac{SS_{\text{fit}}/(n - p - 1)}{ SS_{\text{total}}/(n - 1) } \] where \(p\) is the number of predictors and \(n\) is the number of observations.

In this expression, the sum-of-squares term can be thought of as a ratio comparing the amount of variance explained by two models: the “full” model (the one with \(p\) predictors) and the “baseline” model (the one with just the intercept). Each model’s sum of squares is scaled by its degrees of freedom; intuitively, this gives a measure of how much variance is explained given the number of predictors. (We expect that if you throw more predictors in a model, more variance can be explained, just by chance.)

The adjusted \(R^2\) measure only increases if the \(p\) additional predictors improve the model more than would be expected by chance.

Pro: Adjusted \(R^2\) is more appropriate as a metric for comparing different possible model—unlike “multiple \(R^2\)”, adjusted \(R^2\) doesn’t automatically increase whenever new predictors are added.

Con: Multiple \(R^2\) is no longer interpretable as “fraction of the variation accounted for by the model”.

R reports both adjusted and non-adjusted versions, as seen in the model summary above.

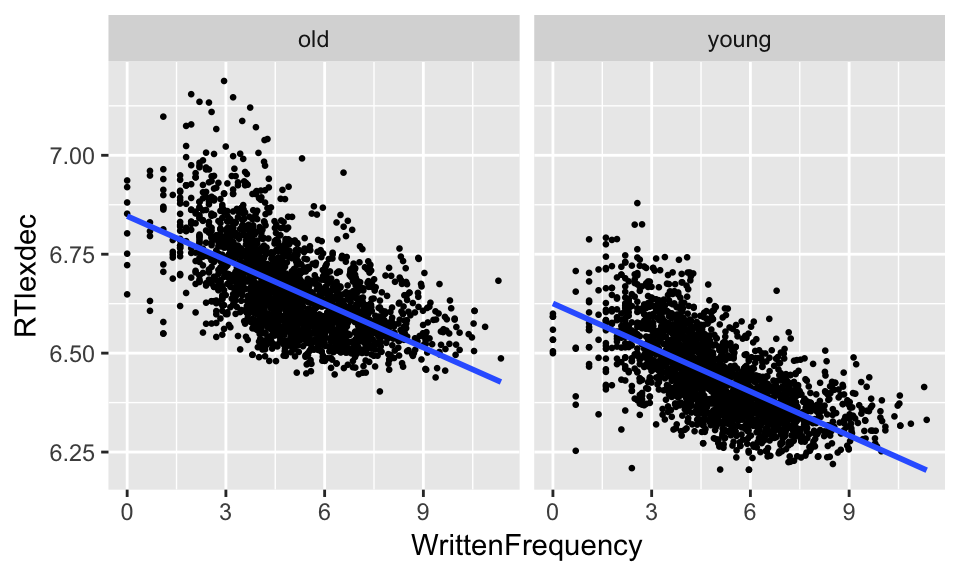

3.3.2 Interactions and factors

The models we have considered so far assume that each predictor affects the response independently. For example, in the example above (RT ~ frequency + age), our model assumes that the slope of the frequency effect on RT is the same for old speakers as for young speakers. This looks like it might be approximately true:

ggplot(english, aes(WrittenFrequency, RTlexdec)) +

geom_point(size=0.5) +

geom_smooth(method="lm", se=F) +

facet_wrap(~AgeSubject) in that there seems to be a similarly negative slope for both groups. That is, a model of this form seems approximately correct: \[

Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \epsilon

\] (\(\epsilon\) = error).

in that there seems to be a similarly negative slope for both groups. That is, a model of this form seems approximately correct: \[

Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \epsilon

\] (\(\epsilon\) = error).

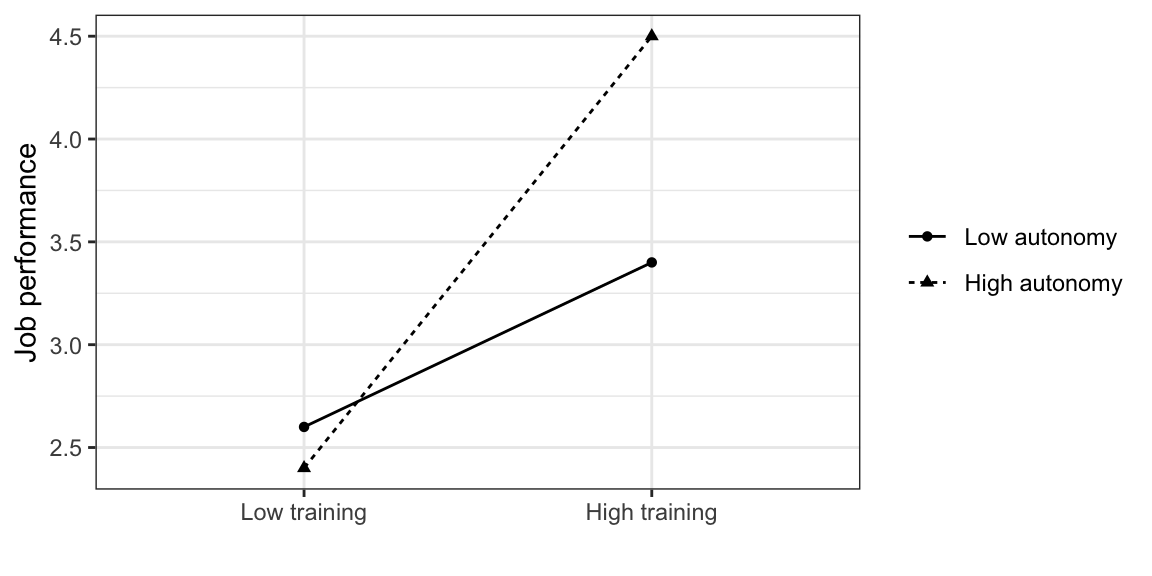

Here is a (fake) example where the independence assumption is definitely not true:

\(Y\): Job performance (continuous)

\(X1\): Training (categorical)

\(X2\): Autonomy (categorical)

The effect of training on job performance is larger for high-autonomy participants. In this case, we say there is an interaction between training and autonomy: the value of one predictor modulates the effect of the other. This interaction is modeled by adding an extra term to the regression equation: \[ Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_1 X_2 + \epsilon \] which is the product of the two terms. Note that \[ \beta_2 X_2 + \beta_3 X_1 X_2 = (\beta_2 + \beta_3 X_1) X_2 \] so the interaction coefficient \(\beta_3\) modulates the slope of \(X_2\): depending on the value of \(X_1\), \(X_2\) has a different effect on \(Y\).

Example

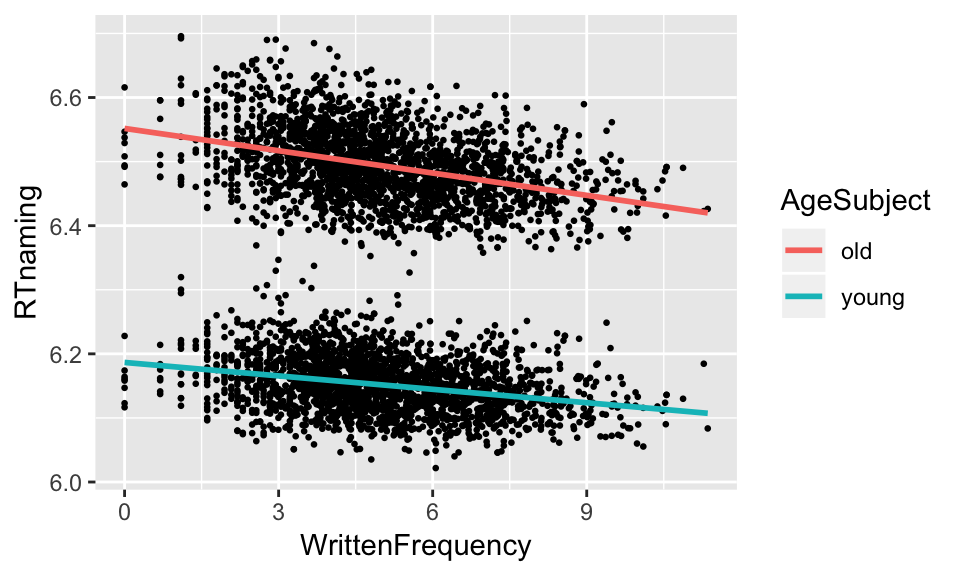

Returning to the example above, suppose we’d like to know how much the slope of WrittenFrequency does actually differ between old and young speakers, and whether the difference is statistically significant (\(\alpha = 0.05\)).

english %>% ggplot(aes(WrittenFrequency, RTnaming)) +

geom_point(size=0.5) +

geom_smooth(aes(group=AgeSubject, color=AgeSubject), method="lm", se=F)

X1:X2 means “interaction between X1 and X2, and the notation used in R for interactions is X1*X2, which expands automatically to X1 + X2 + X1:X2. (The non-interaction terms are sometimes called main effects.) Note that in R these are equivalent:

lm(RTnaming ~ WrittenFrequency * AgeSubject, english)

lm(RTnaming ~ WrittenFrequency + AgeSubject + WrittenFrequency:AgeSubject, english)To fit a model including an interaction between frequency and age:

m3 <- lm(RTnaming ~ WrittenFrequency * AgeSubject, english)In the summary of this model, of interest is the WrittenFrequency:AgeSubjectyoung row, which is the interaction effect:

summary(m3)##

## Call:

## lm(formula = RTnaming ~ WrittenFrequency * AgeSubject, data = english)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.160510 -0.033425 -0.002963 0.030855 0.181032

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.5517608 0.0029118 2250.09 < 2e-16

## WrittenFrequency -0.0116031 0.0005444 -21.31 < 2e-16

## AgeSubjectyoung -0.3651823 0.0041179 -88.68 < 2e-16

## WrittenFrequency:AgeSubjectyoung 0.0046191 0.0007699 6.00 2.13e-09

##

## (Intercept) ***

## WrittenFrequency ***

## AgeSubjectyoung ***

## WrittenFrequency:AgeSubjectyoung ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.04796 on 4564 degrees of freedom

## Multiple R-squared: 0.9278, Adjusted R-squared: 0.9278

## F-statistic: 1.956e+04 on 3 and 4564 DF, p-value: < 2.2e-16We see that there is indeed a significant interaction between WrittenFrequency and AgeSubject.

Questions:

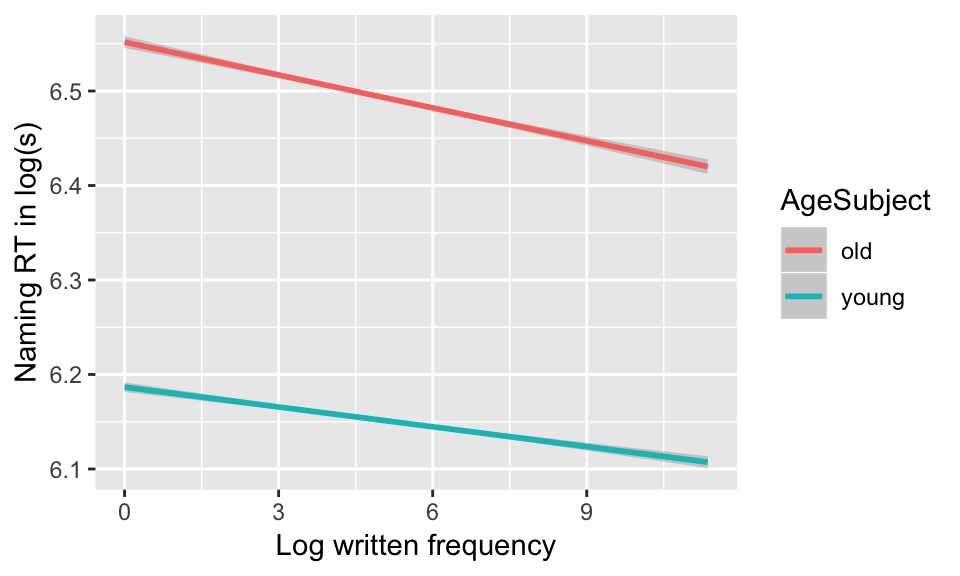

3.3.3 Plotting interactions

In general, making plots is indispensable for interpreting interactions. It is possible, with practice, to interpret interactions from the regression table, but examining a good plot is usually also necessary and much faster.

In later chapters we will cover how to actually visualize model predictions—exactly what the model predicts for different combinations of predictor values. You can usually get a reasonable approximation of this by making the relevant empirical plot, such as:

english %>% ggplot(aes(WrittenFrequency, RTnaming)) +

geom_smooth(method='lm', aes(color=AgeSubject)) +

xlab('Log written frequency') +

ylab('Naming RT in log(s)')

It is often better to use empirical plots to visualize interactions—even though, strictly speaking, you are not plotting the model’s predictions.

Pros: Empirical plots are more intuitive, and if you have a robust effect it should probably show up in an empirical plot.

Cons: Empirical plots don’t show actual model predictions, and in particular don’t control for the effects of other predictors.

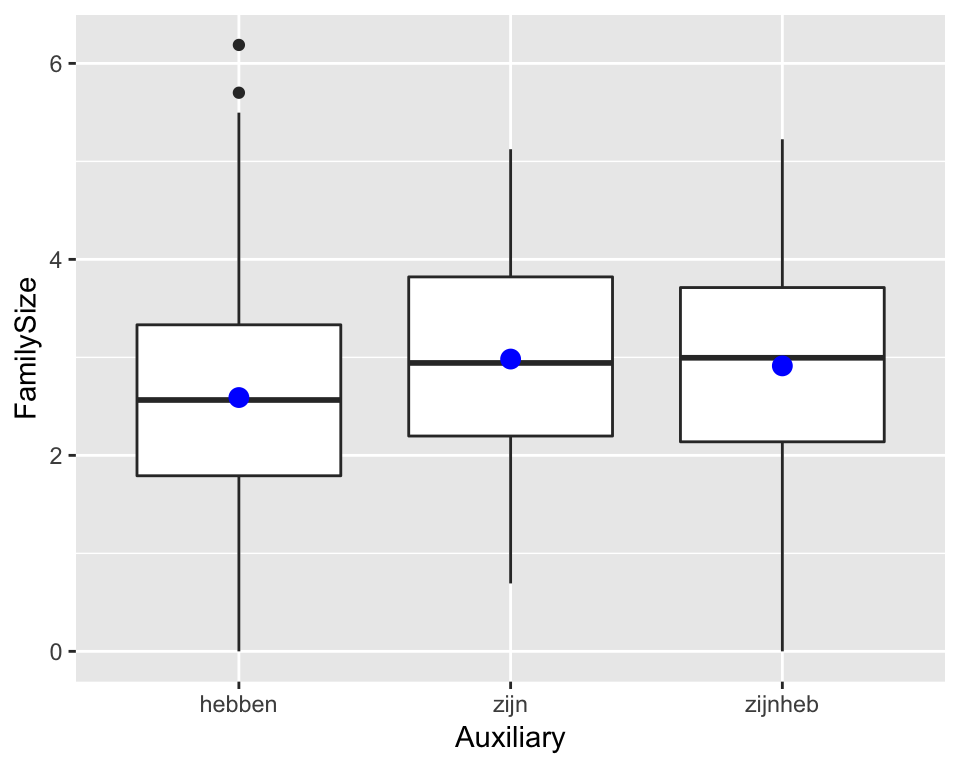

3.3.4 Categorical factors with more than two levels

We are often interested in categorical predictors with more than two levels. For example, for the Dutch regularity data, we might wonder whether the size of a verb’s morphological family size is affected by what auxiliary it takes in the past tense.

regularity %>% ggplot(aes(Auxiliary, FamilySize)) +

geom_boxplot() +

stat_summary(fun.y="mean", geom="point", color="blue", size=3) The relevant variable,

The relevant variable, Auxiliary, has three levels. Let’s see how this kind of variable is dealt with in a regression model.

Exercise

Fit a regression model predicting

FamilySizefromAuxiliary.What does the intercept (\(\beta_0\)) represent?

What do the two coefficients for

Auxiliary(\(\beta_1\), \(\beta_2\)) represent?

Hint: Compare \(\beta\) coefficients with group means, which you can check using summarise() from dplyr.

m4 <- lm(FamilySize ~ Auxiliary, regularity)

summary(m4)##

## Call:

## lm(formula = FamilySize ~ Auxiliary, data = regularity)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.9133 -0.7982 -0.0250 0.7442 3.5983

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.59000 0.04902 52.839 <2e-16 ***

## Auxiliaryzijn 0.39274 0.26780 1.467 0.1430

## Auxiliaryzijnheb 0.32329 0.12594 2.567 0.0105 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.177 on 697 degrees of freedom

## Multiple R-squared: 0.01169, Adjusted R-squared: 0.008855

## F-statistic: 4.123 on 2 and 697 DF, p-value: 0.0166Solution to (2): The value of FamilySize when Auxiliary=“hebben”.

Solution to (3): The predicted difference in FamilySize between Auxiliary=“zijn” and “hebben”, and between Auxiliary=“zijnheb” and “hebben”.

3.3.5 Releveling factors

It’s often useful for conceptual understanding to change the ordering of a factor’s levels. For the regularity example, we could make zijn the base level of the Auxiliary factor:

regularity$Auxiliary <-relevel(regularity$Auxiliary, "zijn")

m5 <- lm(FamilySize ~ Auxiliary, regularity)

summary(m5)##

## Call:

## lm(formula = FamilySize ~ Auxiliary, data = regularity)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.9133 -0.7982 -0.0250 0.7442 3.5983

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.98274 0.26328 11.329 <2e-16 ***

## Auxiliaryhebben -0.39274 0.26780 -1.467 0.143

## Auxiliaryzijnheb -0.06946 0.28771 -0.241 0.809

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.177 on 697 degrees of freedom

## Multiple R-squared: 0.01169, Adjusted R-squared: 0.008855

## F-statistic: 4.123 on 2 and 697 DF, p-value: 0.0166Questions:

- What is the interpretation of the intercept and the two

Auxiliarycoefficients in this new model?

3.4 Linear regression assumptions

Up to now, we have discussed regression models without worrying about the assumptions that are made by linear regression, about your data and the model. We will cover six main assumptions, the first four have to do with the form of the model and errors:

Linearity

Independence of errors

Normality of errors

Constancy of errors (homoscediasticity)

followed by two assumptions about the predictors and observations:

Linear independence of predictors

Observations have roughly equal influence on the model

We’ll discuss each in turn.

Our presentation of regression assumptions and diagnostics is indebted to Chapters 4, 6 and 9 of Chatterjee & Hadi (2012), where you can find more detail.

3.4.1 Visual methods

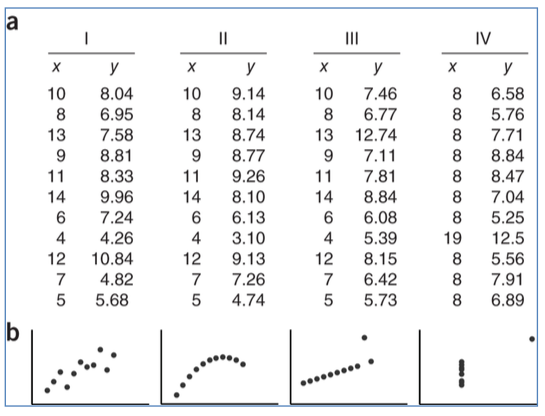

Visualization is crucial for checking model assumptions, and for data analysis in general. A famous example illustrating this is Anscombe’s quartet: a set of four small datasets of \((x,y)\) pairs with:

The same mean and variance for \(x\)

The same mean and variance for \(y\)

A correlation(\(x\), \(y\)) = 0.816

The same regression line (\(y = 3 + 0.5\cdot x\))

(Source: unknown, but definitely taken from somewhere)

With more than one predictor it becomes difficult to check regression assumptions by just plotting the data, and visual methods such as residual plots (presented below) are crucial.

3.4.2 Assumption 1: Linearity

The first assumption of a linear regression model is that the relationship between the response (\(Y\)) and predictors (\(X_i\)) is… linear.

While obvious, this assumption is very important: if it is violated, the model’s predictions can be in serious error.

The linearity assumption can be partially checked by making a scatterplot of \(Y\) as a function of each predictor \(X_i\). It is hard to exhaustively check linearity for MLR, because nonlinearity might only become apparent when \(Y\) is plotted as a function of several predictors.

Example

Consider relative pitch, intensity, and duration in the alternatives dataset.

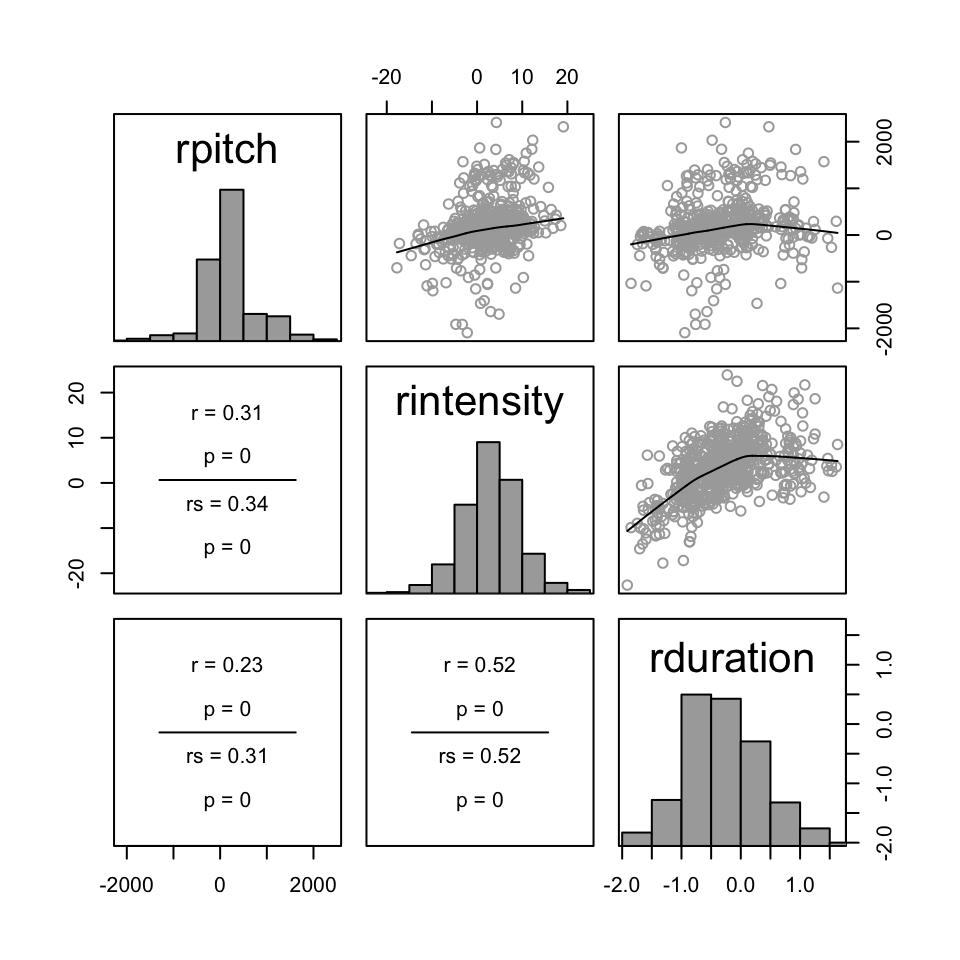

We can make pairwise plots of these variables using the pairscor.fnc() function languageR, to see if any of these variables might be a function of the other two.

pairscor.fnc(with(alt, cbind(rpitch, rintensity, rduration)))

Questions:

- Is this the case?

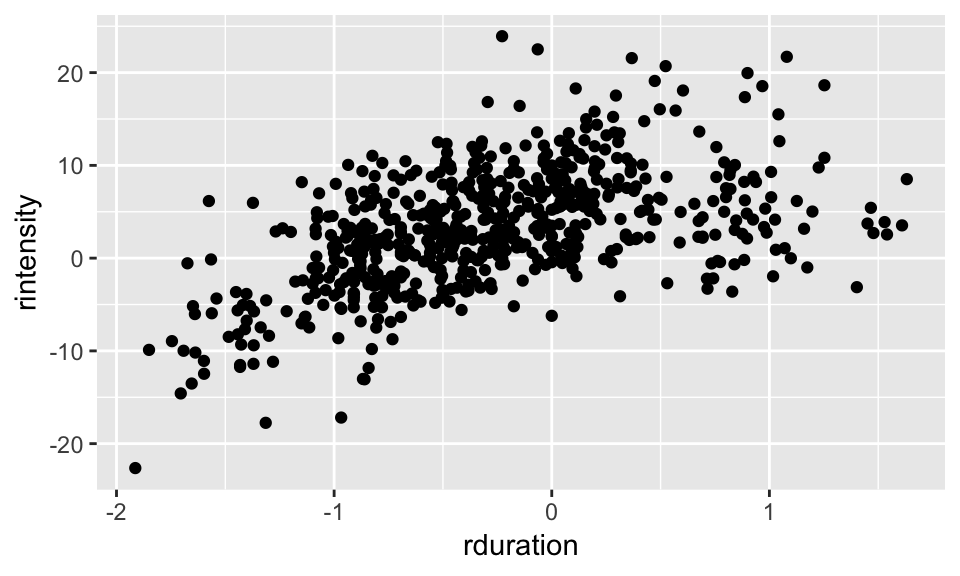

Let’s examine the relationship between realtive duration and relative intensity more closely:

alt %>% ggplot(aes(rduration, rintensity)) +

geom_point()

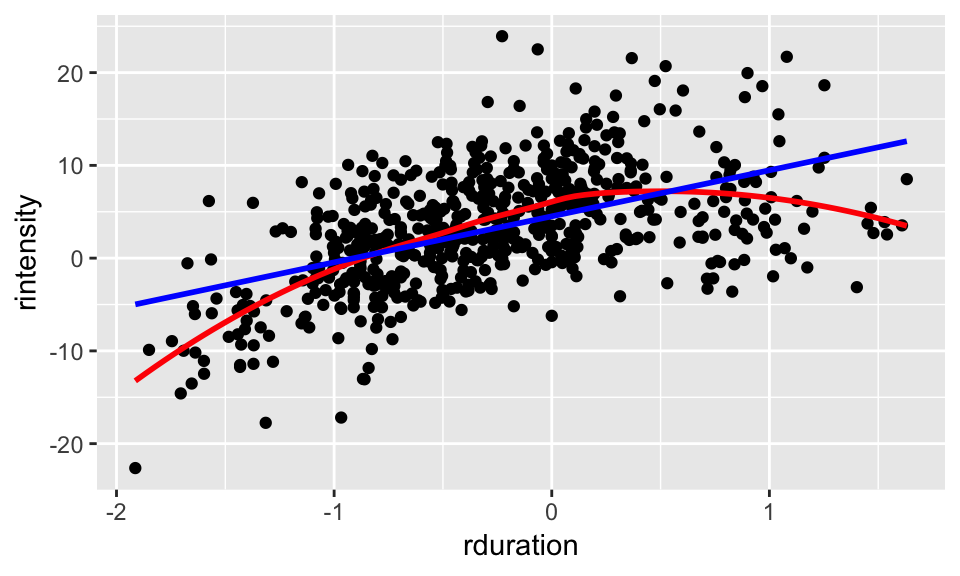

We can try to fit a line to this data, but if we compare to using a nonlinear smoother, it seems clear that the relationship is not linear:

alt %>% ggplot(aes(rduration, rintensity)) +

geom_point() + geom_smooth(col='red', se=F) +

geom_smooth(method="lm", col="blue", se=F)

In particular, it looks like there is a quadratic trend. This means that we can in fact fit a linear regression, we just need to include coefficients for both rduration and its square, like so:

mq <- lm(rintensity ~ rduration + I(rduration^2), alt)

summary(mq)##

## Call:

## lm(formula = rintensity ~ rduration + I(rduration^2), data = alt)

##

## Residuals:

## Min 1Q Median 3Q Max

## -16.3199 -3.5197 -0.2448 2.9515 19.1480

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.8280 0.2622 22.228 <2e-16 ***

## rduration 3.8841 0.3263 11.904 <2e-16 ***

## I(rduration^2) -3.1381 0.3429 -9.151 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.05 on 627 degrees of freedom

## Multiple R-squared: 0.358, Adjusted R-squared: 0.3559

## F-statistic: 174.8 on 2 and 627 DF, p-value: < 2.2e-16We will cover more nonlinear functions of predictors in a later chapter. The important point here is that a model with just rduration as a predictor would have violated the linearity assumption, but a model with both rduration and rduration^2 as predictors doesn’t (arguably).

3.4.3 Assumption 2: Independence of errors

All regression equations we have considered contain an error or residual term: \(\epsilon_i\) for the \(i^{\text{th}}\) observation. A crucial assumption is that these \(\epsilon_i\) are independent: knowing the error for one observation shouldn’t tell you anything about the error for another observation.

Unfortunately, violations of this assumption are endemic in realistic data. The simplest example is in time series, or longitudinal data—such as pitch measurements taken every 10 msec in a speech signal.

Questions:

- Can you think of why this might be the case?

In linguistics and psycholinguistics, violations of the independence assuption are common because most datasets include multiple observations per participant or per item (or per word, etc.). Crucially, violations of the independence assumption are often anti-conservative: CIs will be too narrow and \(p\)-values too small if the lack of independence of errors is not taken into account by the model.

Some solutions to these issues:

Paired-t-tests, where applicable (binary predictor; two measures per participant)

Mixed-effects regression (more general solution, major focus later this term)

Until we cover mixed-effects regression, we will be getting around the fact that the independence-of-errors assumption usually doesn’t hold for linguistic data, in one of two ways:

Selectively using datasets where this assumption does hold, such as

regularity.Analyzing datasets where this assumption does not hold, such as

tappingorenglish, using analysis methods that do assume indepedence of errors (such as linear regression), with the understanding that our regression models are probably giving results that are “wrong” in some sense.

3.4.4 Assumption 3: Normality of errors

The next major assumption is that the errors \(\epsilon_i\) are normally distributed, with mean 0 and a fixed variance. This assumption is impossible to check directly, because we never observe the true errors \(\epsilon_i\), only the residuals \(e_i\). The residuals are no longer normally distributed (even if the errors are), because some observations will be more influential than others in determining the fitted responses \(\hat{y}_i\) when fitting the least-squares estimates of the regression coefficients.

Example

young <- filter(english, AgeSubject=='young')

set.seed(2903)

young_sample <- young %>% sample_n(100)

ggplot(young_sample, aes(WrittenFrequency, RTlexdec)) +

geom_point(size=0.5) +

geom_smooth(method="lm") +

ggtitle("Linear regression line and 95% CI")

The width of the confidence interval increases for points further from (average of WrittenFrequency, average of RTlexdec), because these points are more influential, causing the variance of the residuals to increase—thus, the variance is not constant.

In order to correct for non-normality, the residuals are transformed in a way which accounts for the different influence of different observations (see e.g. Chatterjee & Hadi (2012) 4.3), to studentized or standardized residuals.13 (In R, by applying rstudent or rstandard to a fitted model.)

In general, we check assumptions about errors by examining the distribution of standardized residuals. This is because if the normality of errors assumption holds, then the standardized residuals will be normally distributed with mean 0 and fixed variance. So if they are not, we know the normality of errors assumption does not hold. (If they are, it’s not a guarantee that the normality of errors assumption holds, but we hope for the best.)

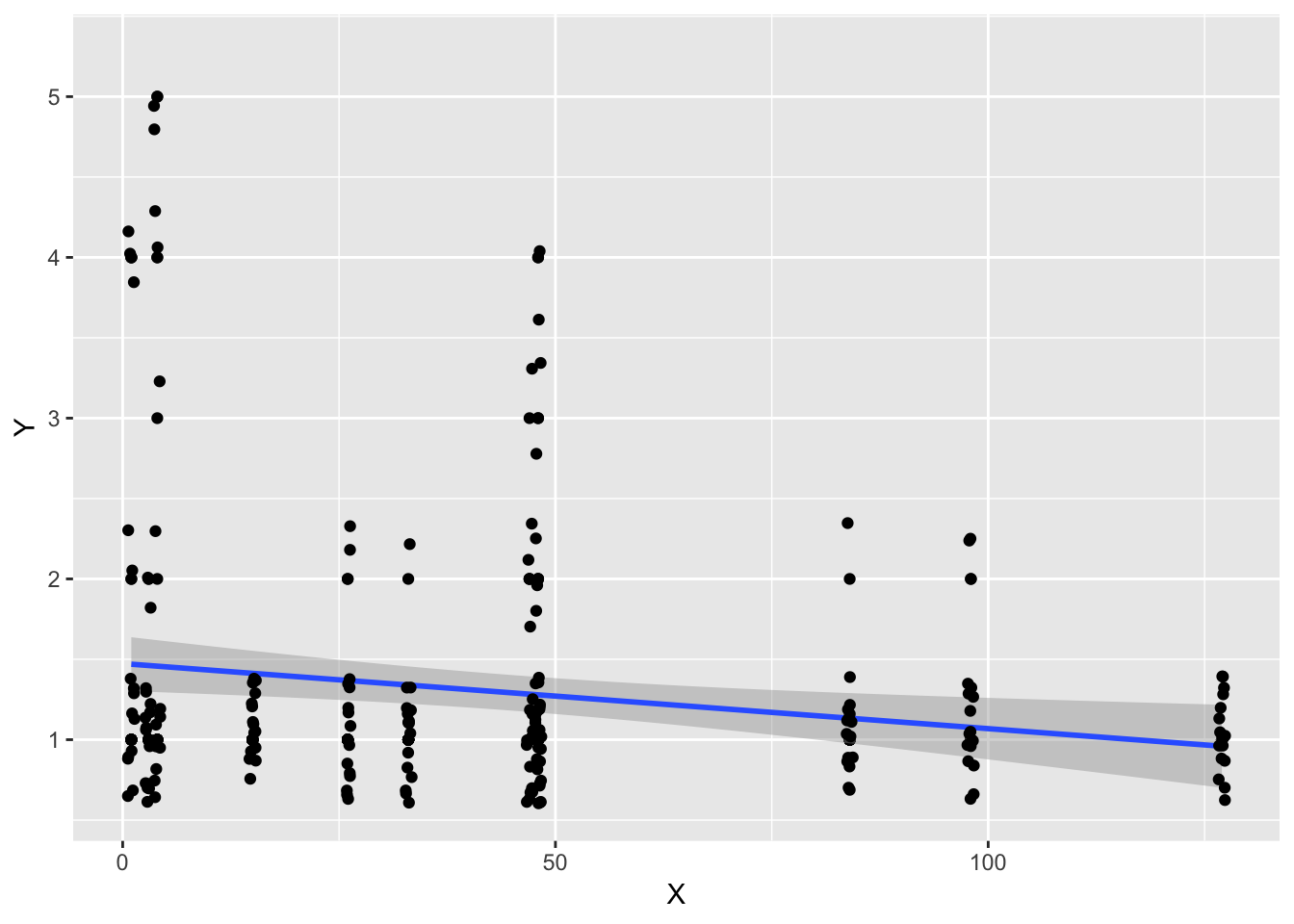

Example

This exercises uses the halfrhyme data, briefly described here. Let’s abstract away from what the variables actually mean, and just think of them as \(Y\) and \(X\):

ggplot(aes(x=cohortSize, y=rhymeRating), data=filter(halfrhyme, conditionLabel=='bad')) +

geom_point() + geom_smooth(method='lm') +

geom_jitter() + xlab("X") + ylab("Y")

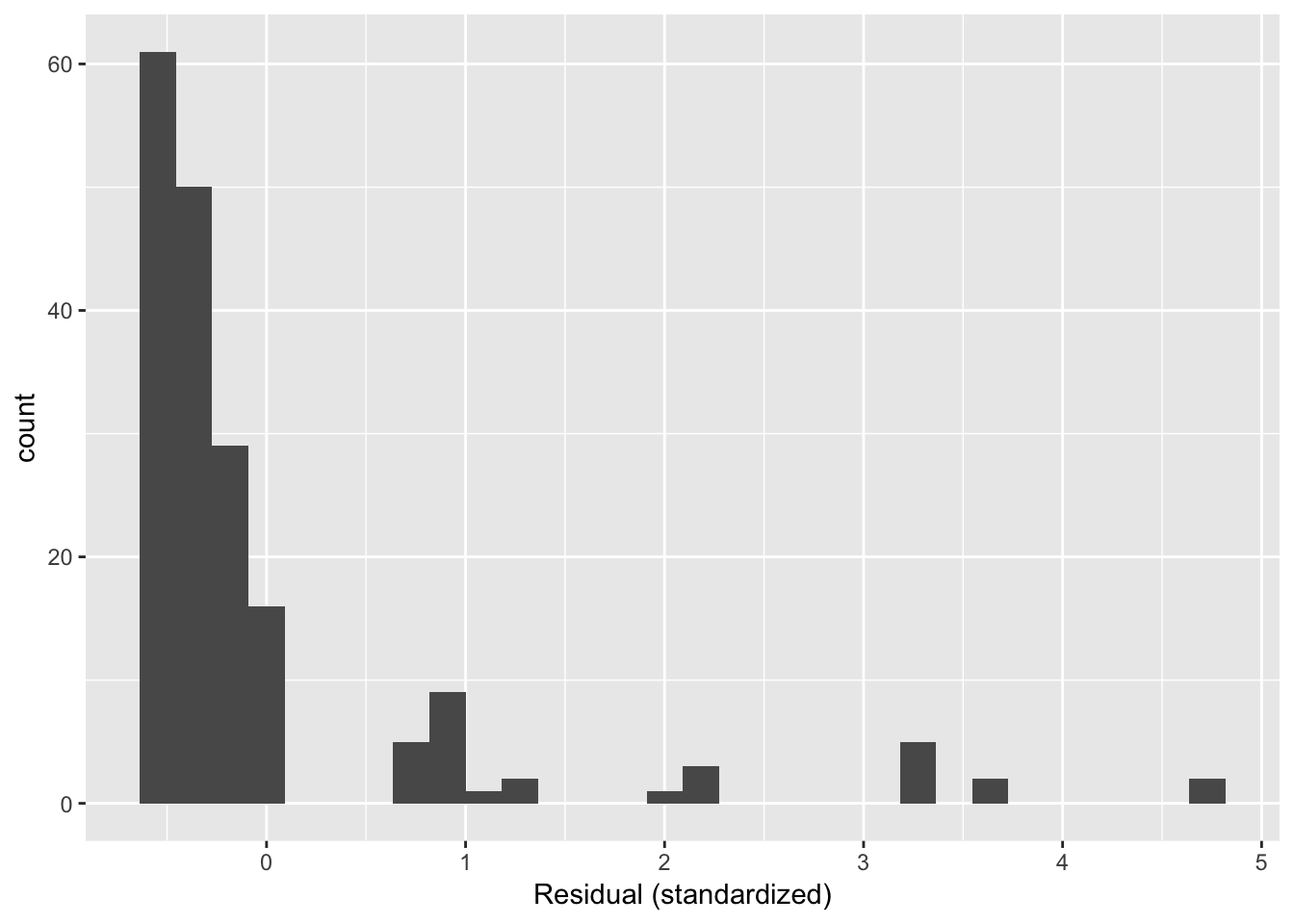

The distribution of the standarized residuals for the regression of \(Y\) as a function of \(X\) is:

halfrhyme.sub <- filter(halfrhyme, conditionLabel=='bad' & !is.na(cohortSize))

mod <- lm(rhymeRating ~ cohortSize, data=halfrhyme.sub)

halfrhyme.sub$resid <- rstandard(mod)

ggplot(aes(x=resid), data=halfrhyme.sub) + geom_histogram() + xlab("Residual (standardized)")

Questions:

- Why do the residuals have this distribution?

This example illustrates probably the most common source of non-normal residuals: a highly non-normal distirbution of the predictor or response.

3.4.4.1 Effect and solution

Non-normality of residuals is a pretty common violation of regression assumptions. How much does it actually matter? Gelman & Hill (2007) (p. 46) argue “not much”, at least in terms of the least-squares estimates of the regression line (i.e., the regression coefficient values), which is often what you are interested in.

However, non-normality of residuals, especially when severe, can signal other issues with the data, such as the presence of outliers, or the predictor or response being on the same scale. (Example: using non-log-transformed word frequency as a predictor.) Non-normality of residuals can often be dealt with by transforming the predictor or response to have a more normal distribution (see Sec. 3.4.7).

Non-normality of residuals can also signal other errors, such as an important predictor missing.

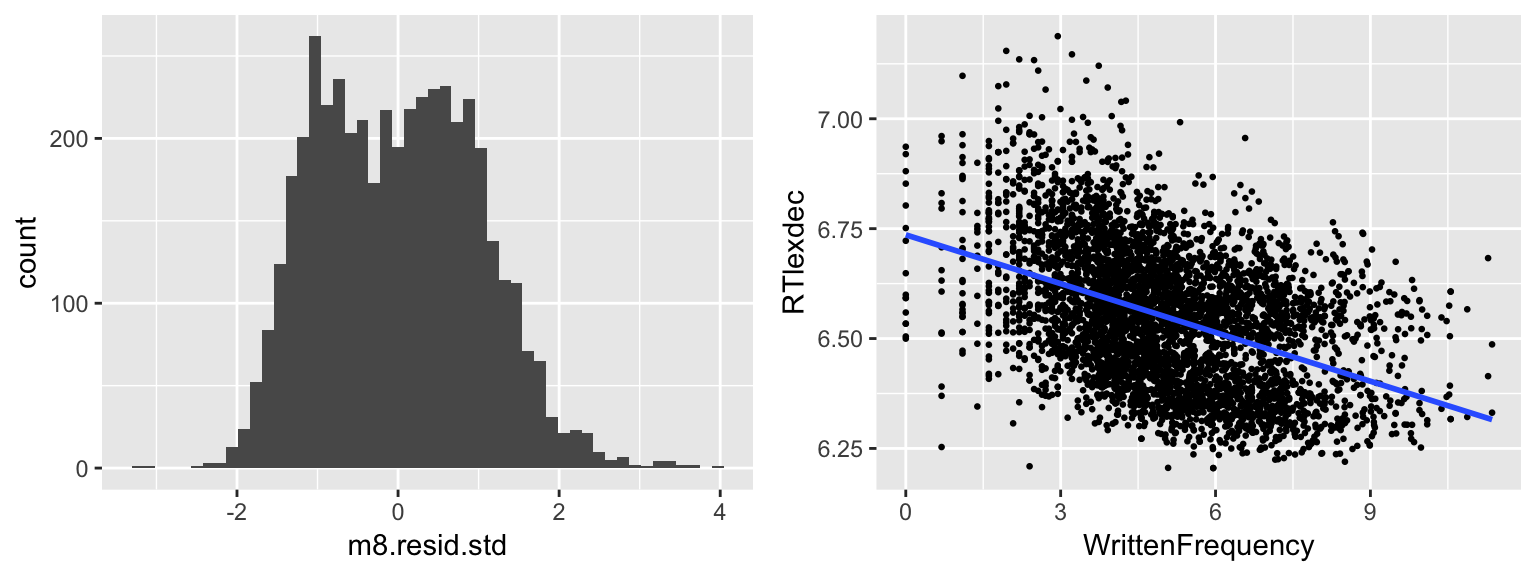

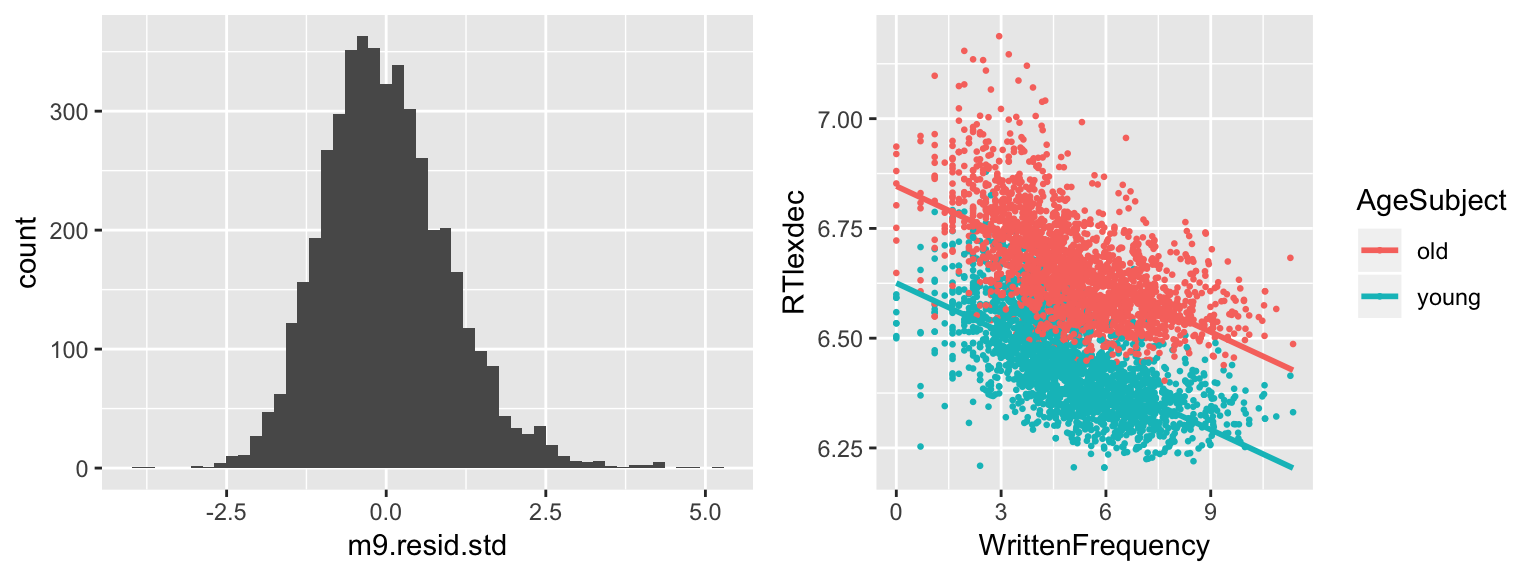

Exercise

- Using the

englishdata, plotRTlexdecas a function ofWrittenFrequency, and add a linear regression line.

ggplot(english, aes(x = WrittenFrequency, y = RTlexdec)) +

geom_point(size = 0.5) +

geom_smooth(method = "lm", se=F)

Do you think the residuals of this model are normally distributed? Why/why not?

Now plot a histogram of the standardized residuals of the mode. Does the plot confirm your first impressions?

m8 <- lm(RTlexdec ~ _______, english)

m8.resid.std <- rstandard(______)

hist(______, breaks = 50)day9_plt1 <- ggplot(english, aes(WrittenFrequency, RTlexdec)) +

geom_point(size=0.5) +

geom_smooth(method="lm", se=F)

m8 <- lm(RTlexdec~WrittenFrequency, english)

m8.resid.std <- rstandard(m8)

day9_plt2 <- ggplot(data.frame(m8.resid.std), aes(x = m8.resid.std)) +

geom_histogram(bins = 50)

grid.arrange(day9_plt2, day9_plt1, ncol = 2)

- Now add

AgeSubjectto the model, and plot a histogram of its standardized residuals. What has changed? Why so?

This exercise shows one reason that examining the residual distribution is useful. If we didn’t already know what the missing predictor was, the non-normality of the residual distribution gives us a way to look for an explanatory variable. (Look at observations in each mode of the distribution, see what they have in common.)

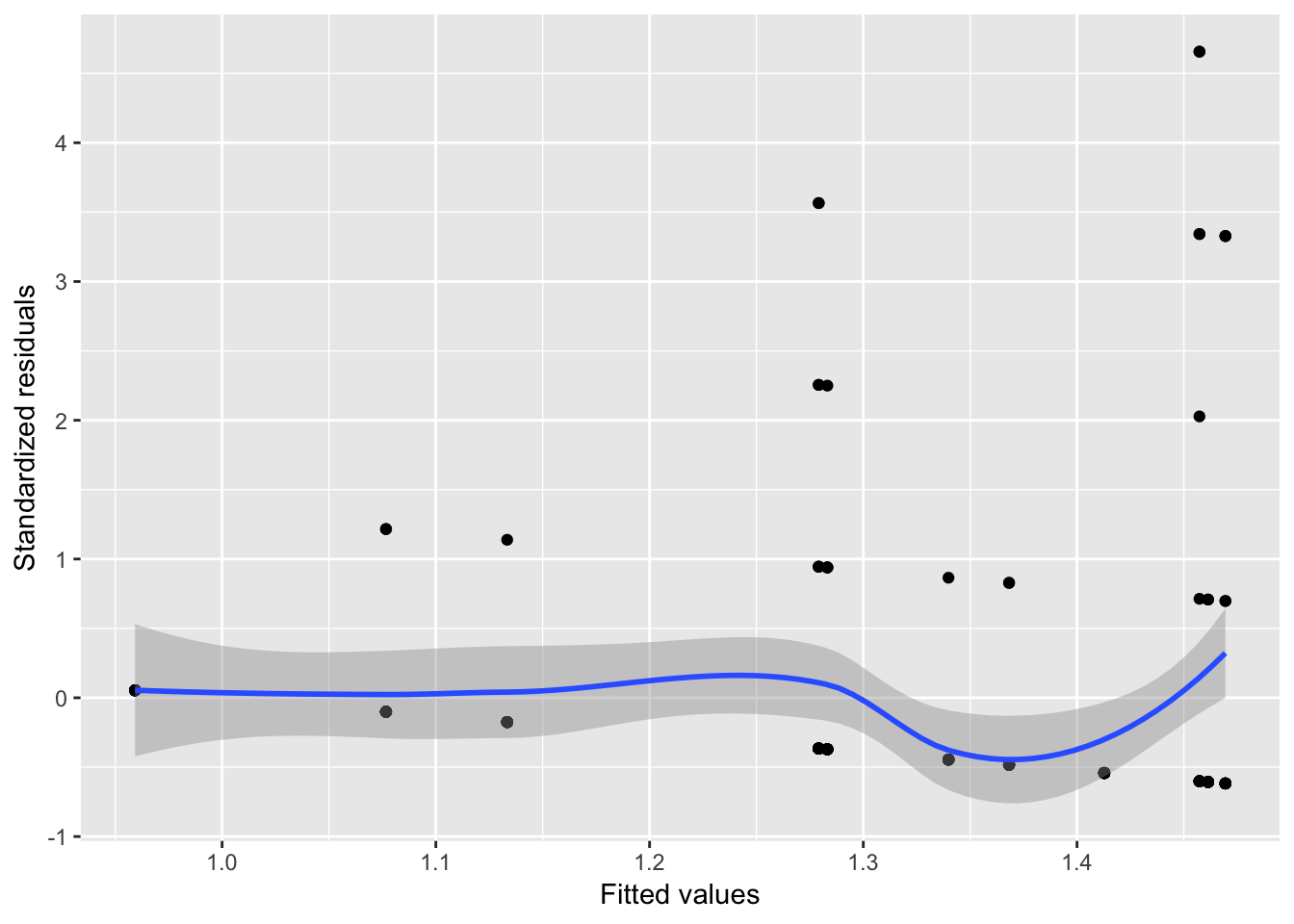

3.4.5 Assumtion 4: Constancy of variance

Homoscedasticity is one of the trickier regression assumptions to think about: the assumption that \(\epsilon_i\) is normally distributed with the same variance, across all values of the predictors.

For example, in our example modeling reaction time as a function of subject age and word frequency, it is assumed that the amount of variability in reaction time is similar for old speakers and young speakers, for high frequency words and young speakers, for observations of high frequency words for old speakers, and so on.

In the example above from the halfrhyme data, the homoscedasticity assumption is violated: lower values of \(\hat{y}\) show higher variance in the residuals.

In this case, the model shows heteroscedasticity.

If homoscedasticity holds, then the standardized residuals are uncorrelated with the predictor values, and with the fitted values, and there should be a constant spread (variance) of residual values (y-axis) for each fitted or predictor value (x-axis). Thus, it is common to plot (standardized) residuals versus fitted values and versus predictors. (The fitted values-residuals plot is one of the diagnostic plots that shows up if you plot(mod) in R, where mod is a fitted model.) The desired pattern is a flat line, with the same variance for different x-axis values.

For the halfrhyme data, the fitted value-residuals plot looks like:

halfrhyme.sub <- filter(halfrhyme, conditionLabel=='bad' & !is.na(cohortSize))

mod <- lm(rhymeRating ~ cohortSize, data=halfrhyme.sub)

halfrhyme.sub$resid <- rstandard(mod)

halfrhyme.sub$fitted <- fitted(mod)

ggplot(aes(x=fitted, y=resid), data=halfrhyme.sub) + geom_point() + geom_smooth() + xlab("Fitted values") + ylab("Standardized residuals")

There is greater variance for higher fitted values, indicating heteroscedacticity.

3.4.5.1 Effect and solution

In general, estimates of least-squares coefficients in the presence of heteroscedasticity are unbiased, but standard errors will be under- or over-estimated. This means that confidence intervals will be too narrow/wide and \(p\)-values too low/high.

Heteroscedasticity is endemic in some types of data, such as from lexical statistics (R. Baayen (2008), p. 35). In other types of data, such as economic data, heteroscedasticity is so common that dealing with it is a primary concern in statistical analysis. Heteroscedasticity is discussed less frequently than other regression assumptions for linguistic data, but it is unclear whether this is because heteroscedasticity is less common than in other types of data or just has not been focused on by language scientists.

One can often correct for heteroscedasticity by using various transformations of the response and predictors to get better estimates (Chatterjee & Hadi (2012), Ch. 4). For example, in the halfrhyme example, it turns out that a stronger effect of \(X\) on \(Y\) (lower \(p\)-value) can be detected once variance is stabilized.

3.4.6 Interim summary

Linearity

Serious violation if not met.

Fit data with non-linear trend (e.g. quadratic)

Transformed predictor/response to normality (e.g. log-transform)

Independence of error:

- In linguistic data: use mixed-effects regression14

Normality of errors:

Not too serious violation if not met, but may signal issues with model/data

Remove outliers; transform \(X\)/\(Y\) to normality

Constancy of variance:

Not commonly checked in linguistic data

Leads to uncertain regression estimates

Transform predictor/response to normality

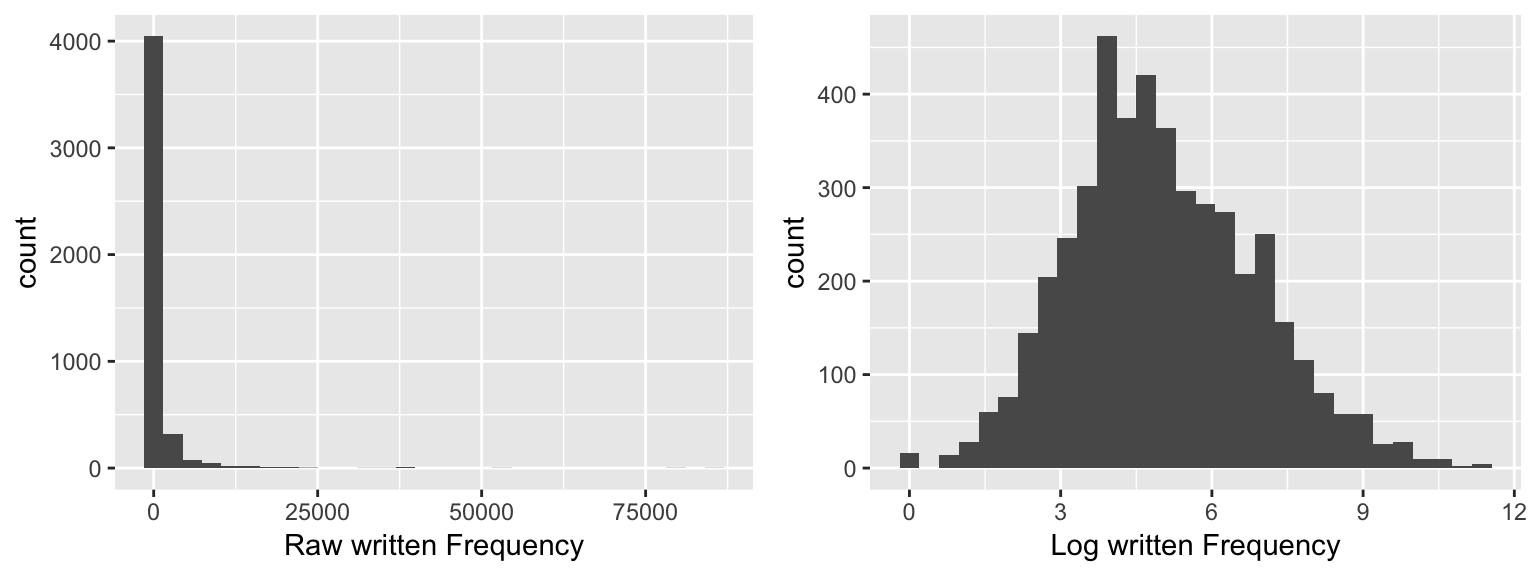

3.4.7 Transforming to normality

Normality of the distribution of the response and predictors (\(Y\) and \(X_i\)) is not an assumption of linear regression. This is a common misconception, perhaps because normality is an assumption of other basic statistical inference tools, such as \(t\)-tests.

However, there is still good reason to be circumspect if \(Y\) or \(X_i\) are not normally distributed, because this can often lead to violations of regression assumptions. This is why it is recommended to transform the predictors and response to normality to fix violations of the linearity, normality of errors, and homoscedasticity assumptions. Because non-normality of \(Y\) or \(X_i\) can easily lead to violations of regression assumptions, it is sometimes recommended to transform them to normality just to be safe. This makes it less likely that a regression assumption will be violated, but also changes the interpretation of the transformed variable, which may make it harder to interpret the model’s results.

For linguistic data, logarithmic transformations are often useful when working with skewed distributions, because many kinds of linguistic data are roughly log-normally distributed, meaning the log-transformed variable is normally distributed. Some examples:

Lexical statistics (e.g. lexical frequency, probability)

Reaction times (e.g. naming latencies)

Duration measures in phonetics (syllable, phrase durations)

Other transformations besides log are also used: reaction times are sometimes inverse or inverse-log-transformed (1/RT, log(1/RT)), and durations are sometimes square-root-transformed.

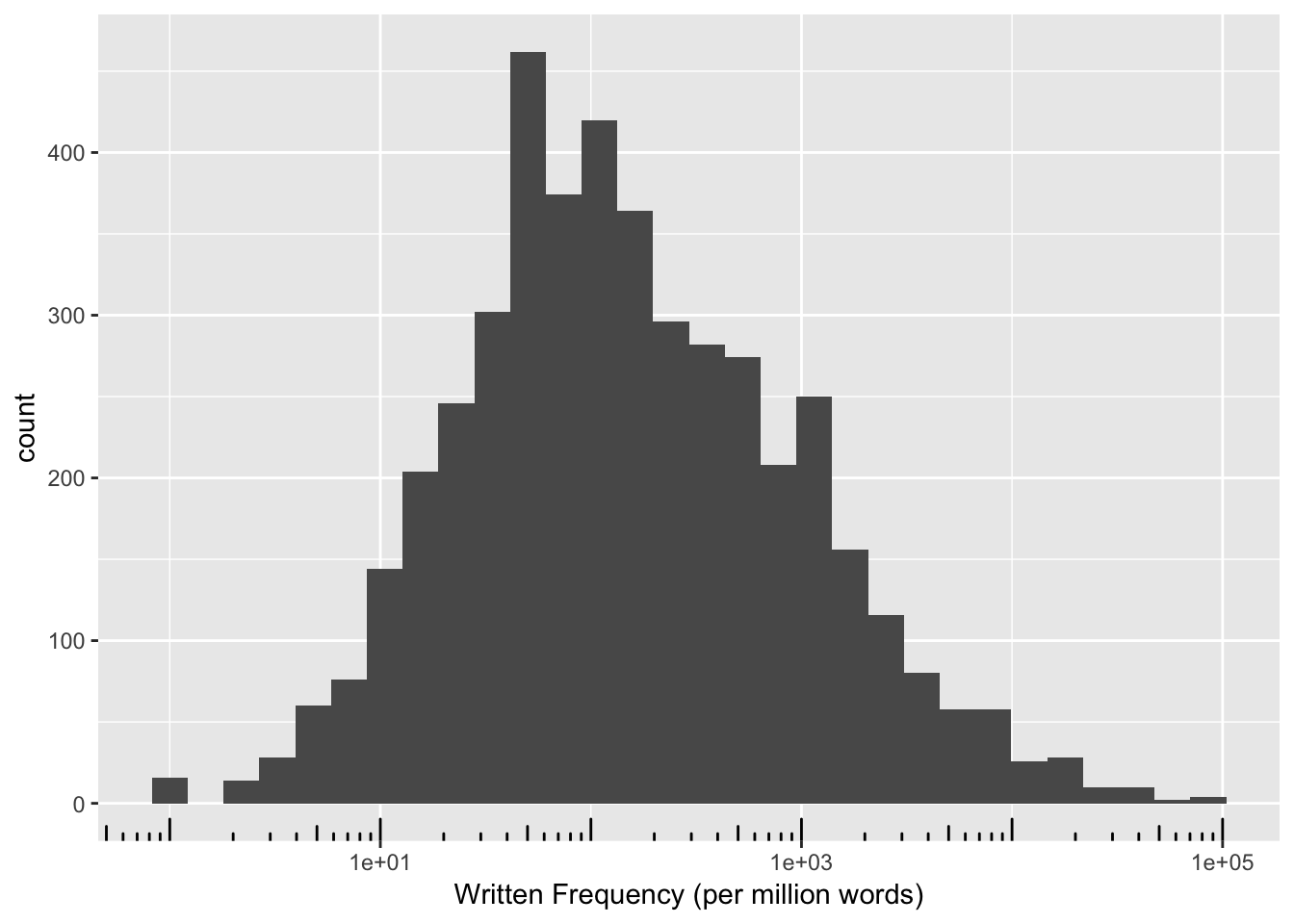

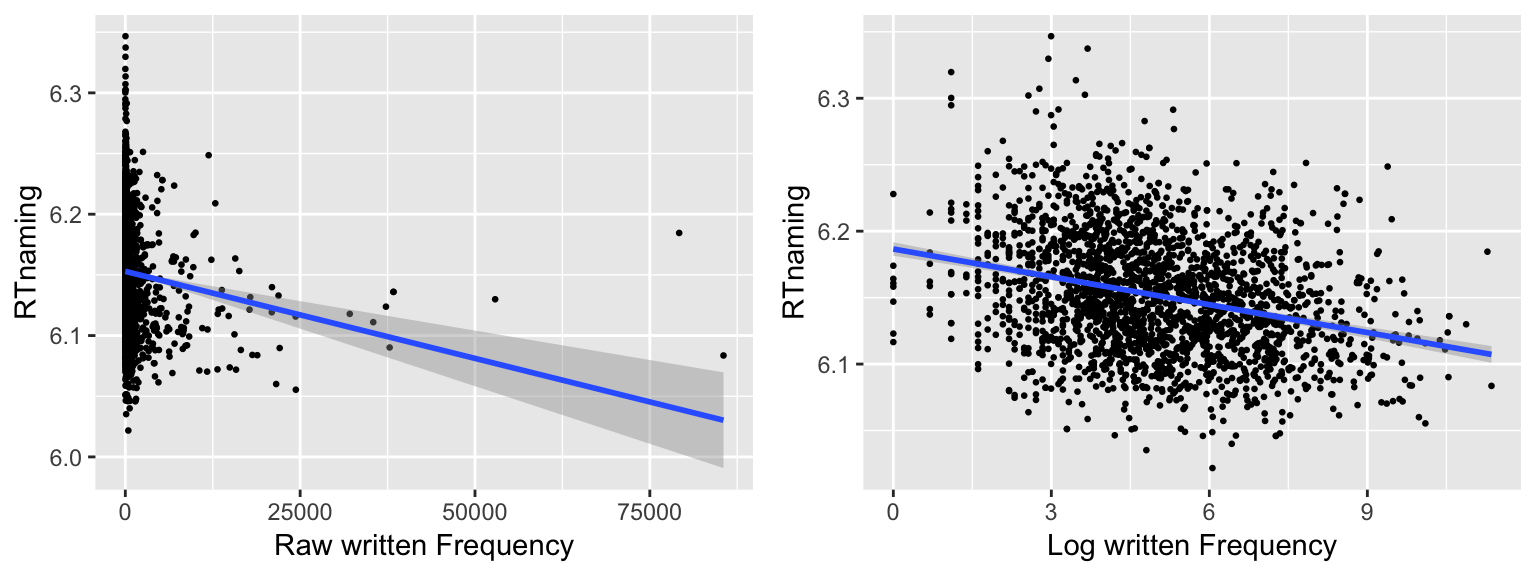

Example: Distribution of raw vs. log lexical frequency

english$WrittenFrequency_raw <- exp(english$WrittenFrequency)

english$WrittenFrequency_log <- english$WrittenFrequency

day10_plt1 <- ggplot(english, aes(x = WrittenFrequency_raw)) +

geom_histogram() +

xlab("Raw written Frequency")

day10_plt2 <- ggplot(english, aes(x = WrittenFrequency_log)) +

geom_histogram() +

xlab("Log written Frequency")

grid.arrange(day10_plt1, day10_plt2, ncol = 2)

R note: ggplot functions such as scale_x_log10() can be used to plot data in its raw units on a log scale, which is often more interpretable. Ex:

ggplot(english, aes(x = WrittenFrequency_raw)) + geom_histogram() + scale_x_log10() +

xlab("Written Frequency (per million words)") + annotation_logticks(sides='b')

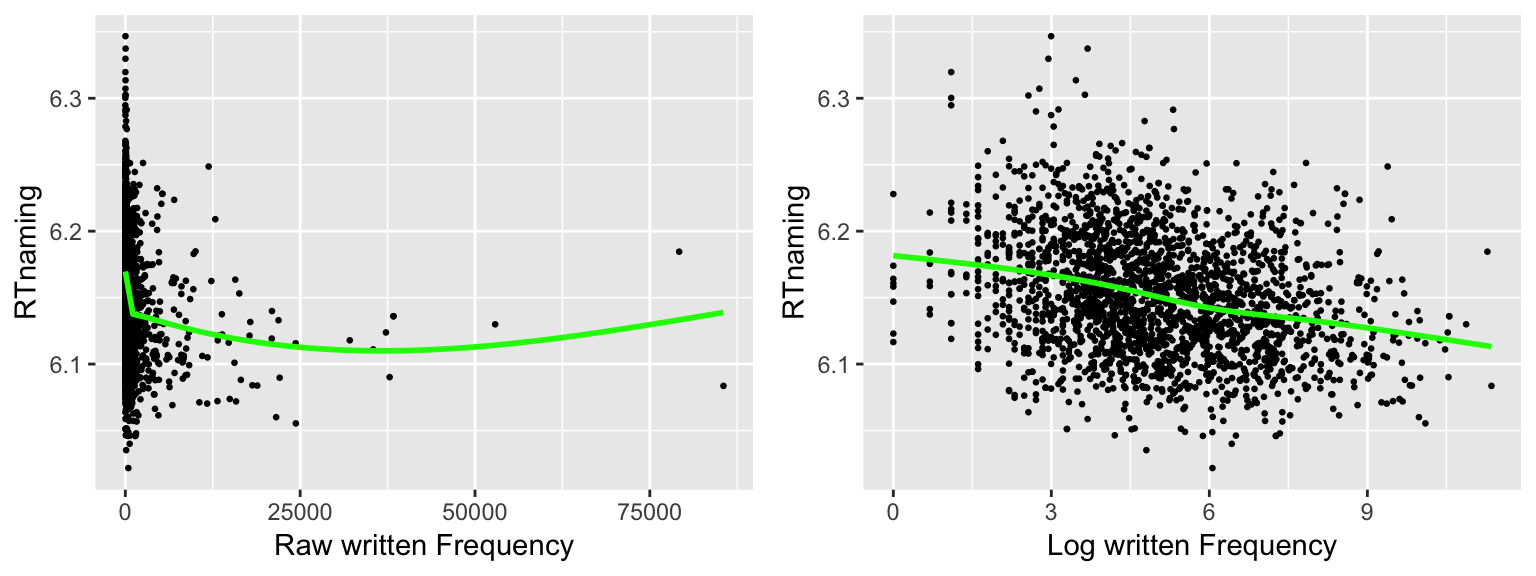

Exercise

Consider the raw and log frequency measures for the english dataset, for young speakers:

english$WrittenFrequency_raw <- exp(english$WrittenFrequency)

english$WrittenFrequency_log <- english$WrittenFrequency

young <- filter(english, AgeSubject=="young")- Plot

RTnamingas a function of raw written frequency, and of log written frequency, with a green LOESS (smooth) regression line added to each plot (usinggeom_smooth(color='green')).

plt5 <- ggplot(young, aes(WrittenFrequency_raw, RTnaming)) +

geom_point(size=0.5) +

xlab('Raw written Frequency') +

geom_smooth(se=F, color="green")

plt6 <- ggplot(young, aes(WrittenFrequency_log, RTnaming)) +

geom_point(size=0.5) +

xlab('Log written Frequency') +

geom_smooth(se=F, color="green")

grid.arrange(plt5, plt6, ncol = 2)

- Is the linearity assumption met in each case?

3.4.8 Assumption 5: Linear independence of predictors

A crucial assumption of linear regression is that the predictors are linearly independent, meaning it isn’t possible to write one predictor as a linear function of the others. If you can do so, it’s impossible to disentangle the effect of different predictors on the response. For example, temperatures in farenheit and centigrade are related by a linear function (F = \(9/5\)C + 32), so F and C are linearly dependent.

Another way of thinking of linear dependence is that in a linear regression where you predict one predictor as a function of the others, the \(R^2\) would be 1. Linear dependence of predictors will either give a model error or a weird model output if you fit a linear regression in R, because the math to find least-squares estimates of coefficients doesn’t work out if there is linear dependence: there is no longer a unique optimal solution. (For example, if the slope of F and C in a model were \(\beta_1 = 2\) and \(\beta_2 = 0\), then a different model using slopes of 0 and \(10/9\) would also work.)15

Exercise

Define a new variable in the english dataset that is the average of WrittenFrequency and Familiarity, then fit a linear regression of RTlexdec as a function of this new variable, WrittenFrequency, and Familiarity. What looks odd in the model output?

3.4.9 Collinearity

Full linear dependence of predictors is usually a sign that something is conceptually wrong with your data, or your model structure. In the farenheit/celsius example, it doesn’t make conceptual sense to have both as predictors of anything.

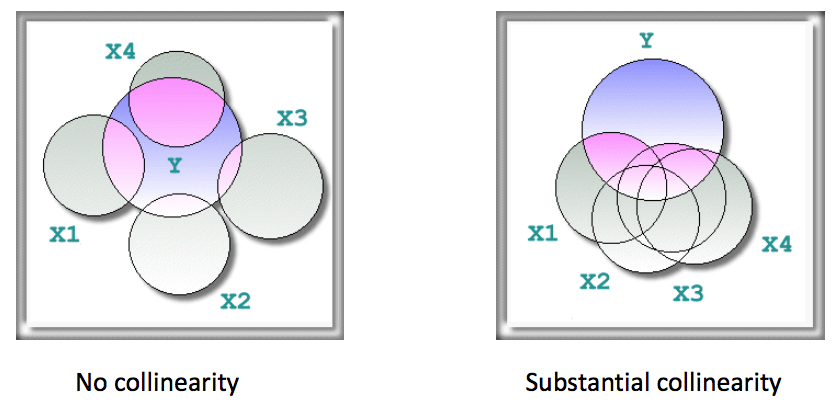

However, it is very common for there to be partial dependence between predictors—that is, \(0 < R^2 < 1\) when you regress one predictor on the others. This is called multicollinearity, or just collinearity.16 Collinearity is ubiquitous in lingusitic data, and can significantly affect the estimates and interpretations of regression coefficients—particularly when collinearity is “high” (say, \(|R|>0.8\)) However, (high) collinearity is not a violation of the assumptions of linear regression!

This figure may be useful to get an intuitive sense of what collinearity is, if you think of X1-X4 as four predictors which affect Y, and may be highly interrelated (right figure) or independent (left figure).

(Source: http://www.creative-wisdom.com/computer/sas/collinear_stepwise.html)

There can be collinearity among several predictors—hence the term “multicollinearity”—where one predictor is a linear function of several others.

Exercise

Suppose we are trying to predict the duration of the first vowel of every word in a dataset of conversational speech, using these four predictors:

log(speaking rate)

log(# of syllables in the word)

log(duration of word)

log(first syllable duration)

where “speaking rate” is defined as “syllables per second”.

Why are these predictors linearly dependent?

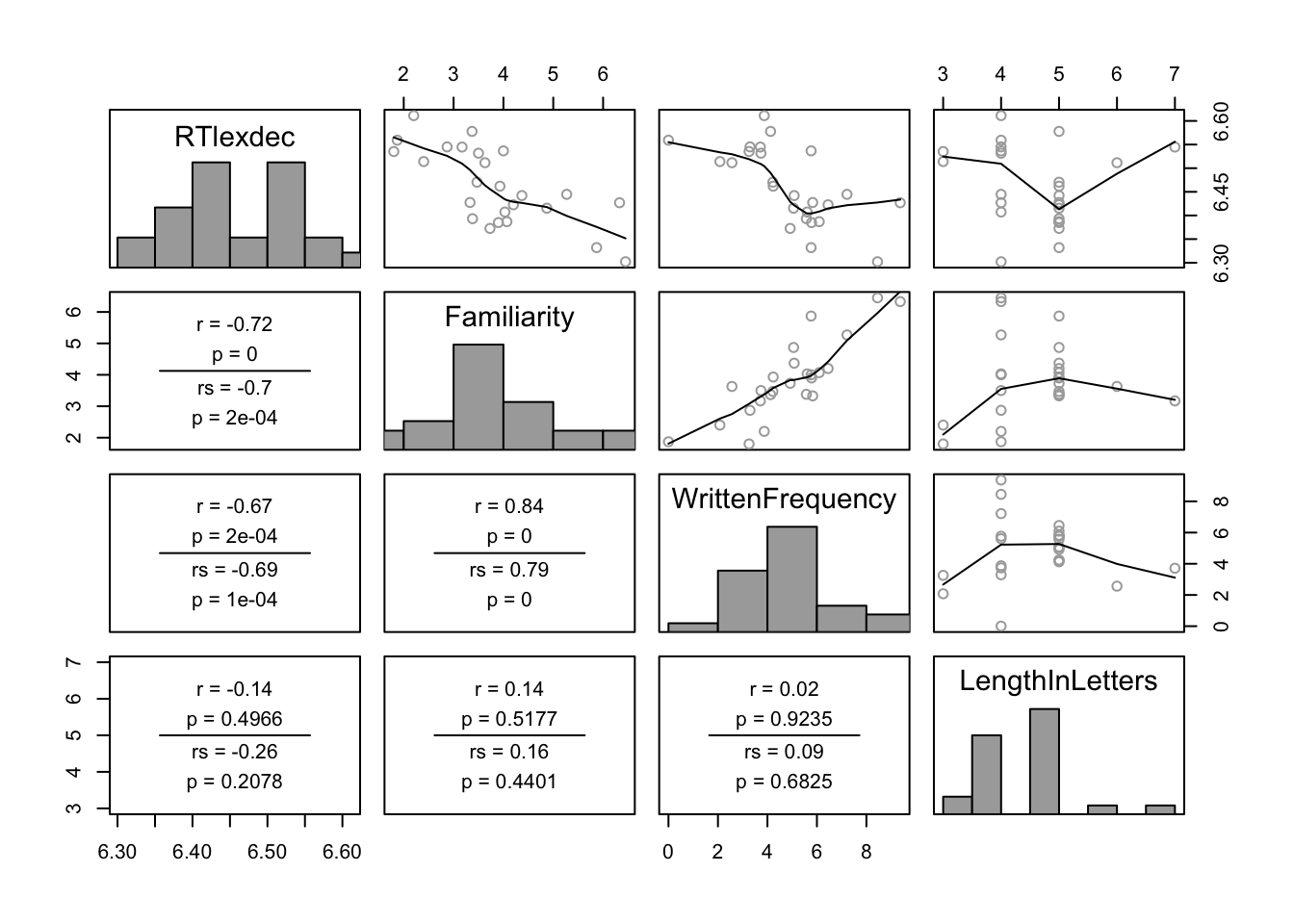

Exercise

Let us simulate a small-scale experiment (\(n=25\)), using data from young speakers in the english dataset. We measure RT, take Familiarity norms from a previous study, and would like to control for the following variables:

WrittenFrequencyLengthInLetters

Because both are expected to correlate with Familiarity, and to affect RT.

We first examine whether there is collinearity among predictors, then assess whether familiarity affects lexical decision RT.

young <- filter(english, AgeSubject == "young")

set.seed(2903)

d <- sample_n(young, 25)

pairscor.fnc(dplyr::select(d, RTlexdec, Familiarity, WrittenFrequency, LengthInLetters))

Questions:

- What correlations are present in the data? Is there collinearity among predictors?

Now, fit a model of just familiarity on RT:

m.fam <- lm(RTlexdec ~ Familiarity, d)

summary(m.fam)##

## Call:

## lm(formula = RTlexdec ~ Familiarity, data = d)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.094956 -0.035753 0.002142 0.050448 0.093090

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.645471 0.038231 173.83 < 2e-16 ***

## Familiarity -0.047697 0.009502 -5.02 4.44e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.05699 on 23 degrees of freedom

## Multiple R-squared: 0.5228, Adjusted R-squared: 0.502

## F-statistic: 25.2 on 1 and 23 DF, p-value: 4.442e-05This model finds a highly significant effect of word familiarity on RT.

Questions:

- What is the interpretation of this model—what relationship is it capturing from the grid of plots above?

Now,

m.fam_freq <- lm(RTlexdec ~ Familiarity + WrittenFrequency + LengthInLetters, d)

summary(m.fam_freq)##

## Call:

## lm(formula = RTlexdec ~ Familiarity + WrittenFrequency + LengthInLetters,

## data = d)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.090416 -0.040823 -0.008442 0.042555 0.094739

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.66953 0.07094 94.013 <2e-16 ***

## Familiarity -0.03333 0.01821 -1.831 0.0814 .

## WrittenFrequency -0.01017 0.01117 -0.911 0.3728

## LengthInLetters -0.00643 0.01410 -0.456 0.6530

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.05838 on 21 degrees of freedom

## Multiple R-squared: 0.5429, Adjusted R-squared: 0.4776

## F-statistic: 8.313 on 3 and 21 DF, p-value: 0.0007782This model finds that none of the three variables significantly affect RT

Questions:

Why has the

Familiarityeffect changed between the two models? (Examine both the coefficient estimates and SEs.)How is it possible that none of the three variables significantly affect RT, but together they do predict half the variation in RT (\(R^2 = 0.54\))?

This kind of situation is called a credit assignment problem: we can tell that some combination of predictors together affects the response, but not whether an individual predictor does, after controlling for other predictors.

3.4.9.1 Effects of collinearity

In general, collinearity increases Type II error: the probability of concluding a predictor has no effect on the response, when it actually does. Collinearity does not in general affect the actual values of coefficient estimates, just their standard errors.17

Importantly, collinearity is a property of the data—not a violation of model assumptions. To the extent that an effect is “missed” (a Type II error) in the presence of collinearity, this is because of the structure of the data. In the example above, it makes intuitive sense that it is harder to detect a “real” effect of Familiarity when frequency is added as a predictor, because of the correlation between Familiarity and frequency.

Because collinearity isn’t a violation of model assumptions, high collinearity cannot be diagnosed using diagnostic plots, such as those considered for Assumptions 1-4 above.

3.4.9.2 Diagnosing collinearity

How can one then detect collinearity, and decide if it could be affecting (the standard errors of) regression coefficients?

Some warning signs that there may be substantial collinearity in your data (Chatterjee & Hadi (2012), 9.4):

Unstable coefficients: large changes in values of the \(\hat{\beta}_i\) when predictors/data points are added/dropped.

Nonsensical coefficients: signs of \(\hat{\beta}_i\) don’t conform to prior expectations.

Unexpected non-significance: values of \(\hat{\beta}_i\) for predictors expected to be important (e.g. from EDA) have large SEs, low \(t\)-values, and high \(p\)-values.

These diagnostics all follow from the fact that when data is highly collinear, a linear relationship between predictors almost holds, so there are many regression coefficient estimates that give models almost as good as the least-squared estimates.

The degree of collinearity can be quantified using the condition number (collin.fnc in languageR), applied to the design matrix (where each column = values of one predictor, across the dataset). For example, for frequency and AoA in the example above, the condition number is:

library(languageR)

collin.fnc(dplyr::select(d, Familiarity, WrittenFrequency, LengthInLetters))$cnumber## [1] 16.0162As a rule of thumb, condition numbers can be interpreted as (R. Baayen (2008), p. 200, citing Belsley & Welsch (1980)):

CN < 6: “no collinearity”

CN < 15: “acceptable collinearity”

CN > 30: “potential harmful collinearity”

3.4.9.3 Is collinearity a problem?

There are two philosophies here.

The first is represented by Chatterjee & Hadi (2012) and R. Baayen (2008): (high) collinearity is a problem—it causes unstable coefficient estimates, increases Type II error, and can slow down or foil model fitting. Therefore, it should be somehow dealt with, for example by changing the predictors included in the model (“residualizing” or dimensionality reduction strategies such as “principal components”).

The second is represented by Gelman & Hill (2007) and R. Levy (2012): collinearity is not a problem—the issues above reflect a lack of information in your data, and how hard it is to detect effects of these variables based on this dataset. Therefore, you should either fit the model with your original predictors (which acknowledges the lack of information), or collect more data.

While we think there is real merit to the second view, there are certainly (very common) circumstances in which it is appropriate to take steps to decrease collinearity. Most common: centering all predictor variables decreases collinearity, without any loss of information. “Residualizing” one predictor on another is generally not a good idea, because it complicates the interpretation of the regression coefficients in unintended ways (Wurm & Fisicaro, 2014).

3.4.10 Assumption 6: Observations

Two linear regression assumptions about observations are that they are:

Equally reliable

Roughly equally influential

The first assumption is hard to check in practice, and we won’t consider it further. The second assumption is important: observations should have “a roughly equal role in determining regression results and in influencing conclusions” (Chatterjee & Hadi (2012), p. 88). This is because our goal in statistical analysis is usually to make inferences about population values of parameters (like the slope of a line of best fit), abstracting away from our finite sample. If certain observations are much more influential than others, they skew the regression results to reflect not the population, but the particular sample we happened to draw.

For example, consider the relationship between raw and log-transformed word frequency, and naming latency, in the english dataset. (The same data as in this example above, but now fitting a simple line of best fit in each case.)

When raw frequency is used as the predictor (left plot), the handful of “extreme” observations with frequency above 25000 have a much greater effect on the slope of the line than other observations, resulting in larger confidence intervals.

Often, a non-normally distributed response or predictors leads to some points influencing the model much more than others, and the problem can be fixed by transforming to normality—as for the word frequency example (right plot). After log-transforming frequency, removing the seven most extreme X values hardly affects the fitted line.

The presence of highly-influential observations can lead to either Type I or Type II errors: the influential observations might be responsible for a spurious result, or they might obscure a pattern that would be clear if the influential observations were excluded.

This isn’t always the case, though—often, some points are inherently more influential than others (say, a couple participants with behavior very different from others), and we need to decide how to proceed in fitting and interpreting the model.

3.4.11 Measuring influence

Cook’s distance is a metric of how influential an observation is in a given model, defined as the product of two terms:

The (squared) standardized residual of the observation

A measure of the leverage of this observation—how much it affects the fitted values (\(\hat{y}_i\))

Cook’s distance (CD) is roughly how much the regression coefficients change when the model is fitted with all data except this observation. Data points with significantly higher CD than other points are highly influential, and should be flagged and examined as potential outliers.

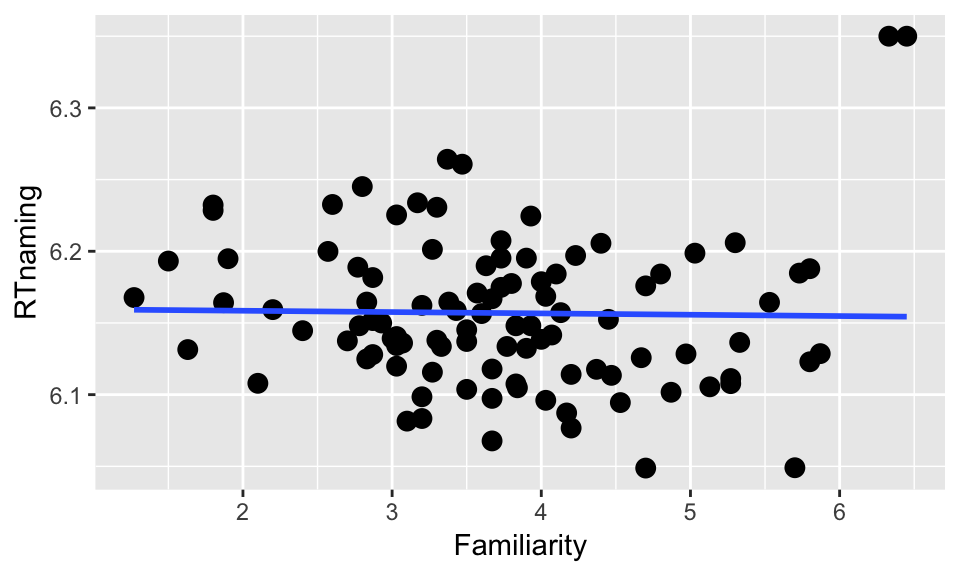

Example

Let’s take a sample of the young-speaker subset of english and tweak it, to get an intuition of what it means for observations to have high influence.

set.seed(2903)

young_subset <- filter(english, AgeSubject=="young") %>%

sample_n(100)

# Tweak data to illustrate point

young_subset[young_subset$Familiarity>6,]$RTnaming <- 6.35

ggplot(young_subset, aes(Familiarity, RTnaming)) +

geom_point(size=3) +

geom_smooth(method="lm", se=F)

Let’s check our intuition by coloring the points according to their CD values:Questions:

- Which points do you think have high influence on the model relating

FamiliarityandRTnaming?

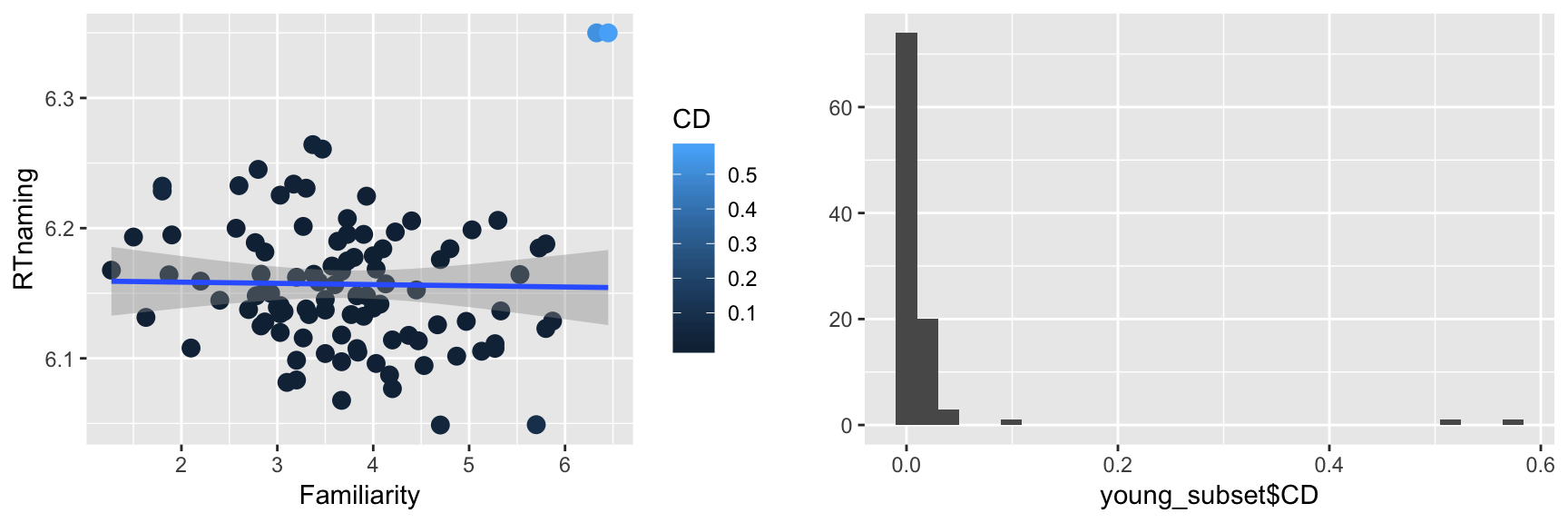

m <- lm(RTnaming ~ Familiarity, young_subset)

young_subset$CD <- cooks.distance(m)

p3 <- ggplot(young_subset, aes(Familiarity, RTnaming)) +

geom_point(size=3, aes(color=CD)) +

geom_smooth(method="lm")

p4 <- qplot(young_subset$CD)

grid.arrange(p3, p4, ncol = 2)

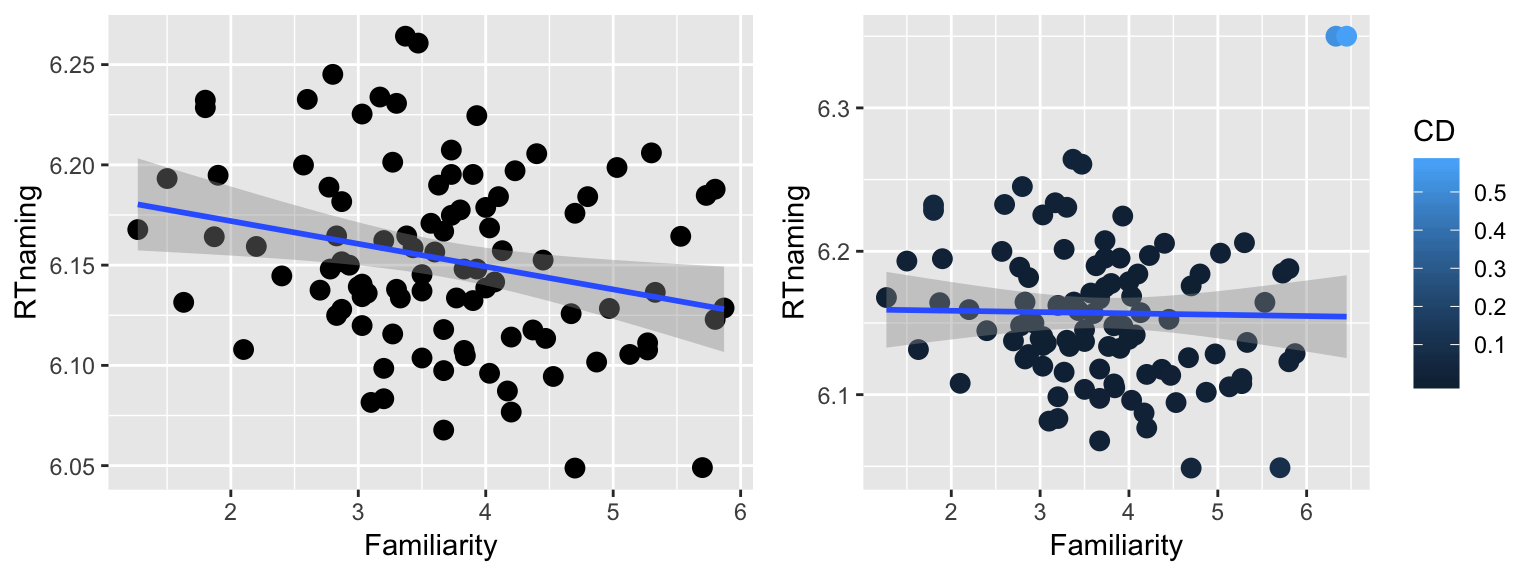

Indeed, two points are outliers in terms of Cook’s distance. We expect to get a very different regression line by removing these two points (why?):

young_subset2 <- filter(young_subset, CD < 0.4)

p5 <- ggplot(young_subset2, aes(Familiarity, RTnaming)) +

geom_point(size=3) +

geom_smooth(method="lm")

grid.arrange(p5, p3, ncol = 2)

After removal of these two outliers, the regression line has negative slope instead of flat slope.

3.4.12 Outliers

Highly influential points are one example of outliers. It is also possible for points to have extreme values of the predictor or response, and yet not be highly influential. There are different philosophies on how to detect outliers (visual inspection versus numeric measures), and what to do with them. At a minimum:

During data analysis, potential outliers should be flagged, examined, and considered for exclusion.

Note what effect excluding outliers would have on the analysis.

Report how outliers were dealt with, and ideally what effect they have on the analysis, in any write-up.

In many cases inspection of gross outliers will reveal data points that should be clearly excluded, such as a participant who always gave the same response, or data coding errors that cannot be corrected. In other cases the decision is more subjective.

3.4.13 Regression assumptions: Reassurance

It may seem a bit daunting that there are so many ways for a regression model to not be appropriate. It is important to take a step back, and remember: when doing data analysis, you should just satisfy the assumptions of the statistical tool being used as best you can, and then fit and report your model anyway. You should not fit regression models blindly, and it’s important to be aware of the assumptions being made by these models, and their limitations. But the more you learn about regression (or any statistical technique), the easier it is to become paralyzed with fear by everything that could be wrong with your data. Keep in mind the dictum of George Box: “Essentially, all models are wrong, but some are useful.” That is, every model is just that: a model of reality, that is only as useful as the insight it can offer about the questions of interest. Often, minor violations of model assumptions don’t affect the qualitative conclusions you can draw from the model.

3.5 Model comparison

So far in our discussion of regression, we have assumed that the form of the model is given: we already know what predictors will be used, and what terms will be included in the model. In practice, with real data this is usually not clear—it is necessary to choose among several possible models of the same data, that differ in which terms they include. Choosing between models is called variable selection (or model selection). In order to perform variable selection, we need a way to perform model comparison: comparing two or more candidate models to assess which one is “better”, in the sense of how well it fits the data relative to its expressive power (e.g. number of predictors).

We wish to compare models of the same dataset, with:

Same response (\(Y\))

Different sets of predictors (\(X_i\))

Model comparison techniques differ on whether they can compare “nested” models only, or both nested and “non-nested” models.

3.5.1 Nested model comparison

Two models are nested if one is a subset of the other, in terms of the set of predictors included.

For example, these two models of RT (in the english dataset) are nested:

\(M_2\) is called the full or superset model, and \(M_1\) the reduced or subset model.

To compare these two models, we wish to perform a hypothesis test of: \[ H_0 : \beta_2 = 0 \]

More generally, we wish to compare two nested models:

\(M_1\): predictors \(X_1, ..., X_q\)

\(M_2\): predictors \(X_1, ..., X_p\) (where \(q < p\))

By performing this hypothesis test:

\[ H_0~:~\beta_{p + 1} = \beta_{p + 2} = \cdots = \beta_q = 0 \]

We fit both models, and obtain a sum of squared residuals for each: \(RSS_1\), \(RSS_2\), which serves as a measure of how well the model fits the data. The RSS of a regression model can be scaled by \(n-p-1\), where \(p\) is the number of predictors in the model, to give a measure of how well the model fits the data given the sample size and number of predictors: \[ RSS/(n-p-1) \] In addition, the difference in RSS values between two nested models, with \(q\) and \(p\) predictors (\(p>q\)), can be scaled to give a measure of how much RSS has gone down, relative to what’s expected given the additional predictors: \[ (RSS_1 - RSS_2)/(p-q) \]

Thus, this test statistic: \[ F = \frac{(RSS_1 - RSS_2)/(p - q)}{RSS_2 / (n - p - 1)} \] gives a measure of how much the unexplained variance is reduced in the full model, with respect to the reduced model. Intuitively, \(R\) divides how much effect “dropping” the \(q\) extra predictors has on explained variance. Note that \(RSS_2\) will always be smaller than \(RSS_1\), since adding predictors to a model can’t give a worse fit to the data. What our hypothesis test checks is: does the superset model fit the data significantly better than the subset model, given the added complexity?

It turns out that this test statistic follows an \(F\) distribution (under the null hypothesis above) with \(p-q\), \(n-p\) degrees of freedom, written \(F_{p-q,n-p}\). So, we can perform hypothesis testing using an \(F\)-test, which may be familiar if you have seen ANOVAs.

Example

This model comparison addresses the question: does adding AgeSubject and LengthInLetters to m1 significantly reduce its unexplained variance?

m1 <- lm(RTlexdec ~ WrittenFrequency, english)

m2 <- lm(RTlexdec ~ WrittenFrequency + AgeSubject + LengthInLetters, english)

anova(m1, m2)## Analysis of Variance Table

##

## Model 1: RTlexdec ~ WrittenFrequency

## Model 2: RTlexdec ~ WrittenFrequency + AgeSubject + LengthInLetters

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 4566 91.194

## 2 4564 35.004 2 56.19 3663.1 < 2.2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The highly significant \(F\)-test means that adding these two variables does significantly improve the model.

Practical notes:

In experimental literature (at least in linguistics), “model comparison” is often used as shorthand for “nested model comparison via an F test” when comparing two linear regressions, because this is the most common way to do so. We will sometimes use this shorthand as well.

The results of such a hypothesis test are usually reported in parentheses, like “Subject age and the word’s length in letters together affect RT beyond word frequency \((F(2, 4564) = 3663, p<0.0001)\)”.

Questions:

- What are the reduced model and full model in the \(F\) test reported at the bottom of every linear regression’s output?

summary(m2)## ## Call: ## lm(formula = RTlexdec ~ WrittenFrequency + AgeSubject + LengthInLetters, ## data = english) ## ## Residuals: ## Min 1Q Median 3Q Max ## -0.34438 -0.06041 -0.00695 0.05241 0.45157 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 6.8293072 0.0079946 854.245 <2e-16 *** ## WrittenFrequency -0.0368919 0.0007045 -52.366 <2e-16 *** ## AgeSubjectyoung -0.2217215 0.0025915 -85.556 <2e-16 *** ## LengthInLetters 0.0038897 0.0015428 2.521 0.0117 * ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.08758 on 4564 degrees of freedom ## Multiple R-squared: 0.6887, Adjusted R-squared: 0.6885 ## F-statistic: 3366 on 3 and 4564 DF, p-value: < 2.2e-16Hint: fit

m0 <- lm(RTlexdec ~ 1, english)and compare tom2.

3.5.2 Non-nested model comparison

In non-nested model comparison, one model isn’t a subset of the other. For example, the two models RT ~ Frequency and RT ~ Familiarity would be non-nested.

Non-nested models can no longer be compared using sums-of-squares (using an \(F\) statistic). Instead, a very different approach is used: information criteria. Instead of testing the hypothesis that certain model coefficients are zero, information criteria compare models based on two general goals:

Fit the data as well as possible

Have as few predictors as possible (“parsimony”)

Different information criteria measures combine model likelihood (\(L\)) and number of predictors (\(p\); the fewer the better) into a single value, of which the most common are:

- Akaike information criterion (AIC):

\[ AIC = 2p - 2 \log(L)\]

- Bayesian information criterion (BIC)

\[ BIC = p\log(n) - 2 \log(L) \]

To apply either criterion, you calculate its value for each model in a set of candidate models, and pick the model with the lowest value.

Note that information criteria can be used to compare models regardless of whether they are nested or non-nested.

Example

m1 <- lm(RTlexdec ~ WrittenFrequency, english)

m2 <- lm(RTlexdec ~ WrittenFrequency + AgeSubject, english)

m3 <- lm(RTlexdec ~ WrittenFrequency + AgeSubject + LengthInLetters, english)

m4 <- lm(RTlexdec ~ WrittenFrequency + LengthInLetters, english)Using AIC and BIC to compare the models:

AIC(m1, m2, m3, m4)## df AIC

## m1 3 -4908.994

## m2 4 -9274.590

## m3 5 -9278.948

## m4 4 -4909.437BIC(m1, m2, m3, m4)## df BIC

## m1 3 -4889.713

## m2 4 -9248.883

## m3 5 -9246.814

## m4 4 -4883.729By AIC, we would choose m3, while by BIC we would choose m2. This illustrates a couple of points:

Different model selection criteria don’t necessarily give the same answer.

BIC tends to choose more parsimonious models than AIC.

3.5.3 Variable selection

Now that we have seen some methods to compare models with different sets of predictors, we can turn to variable selection: how to decide what predictors to keep in a given model.

There is no single best way to do variable selection, out of context—each method has pros and cons, and what method to use depends on the goals of your study. Your goal could be:

Prediction (estimating \(Y\) for unseen data as accurately as possible)

Explanation (choosing the right model from one of several possible pre-specified choices)

Exploratory study

In #1 and #2, our higher-level goal is generalization about “the world” (unseen data). In #3, we are interested primarily in the data we have, and don’t claim that our results generalize to the world. We’ll assume going forward that our goal is #1 or #2—many studies in language sciences are in fact exploratory, but at present this isn’t a goal that leads to publication.

It is important to know that different variable selection methods result in different final models, appropriate for different goals, because most papers that report variable selection simply say what they did, without justification. It is up to the reader to think, what might the final model be if a different variable selection method were used?

There are three components of any variable selection method:

How models are compared (e.g. \(F\) test on nested models, AIC)

How the quality of a single model is evaluated

How it’s decided which of a set of models to choose, based on #1 and #2.

We’ll consider a couple methods here, and cover others in later chapters.

3.5.3.1 Method 1: Nested model comparison

As noted above, “model comparison” is often used as shorthand for “comparison of nested models using F tests”, when referring to linear regressions.

Exercise

Which model of m1, m2, m3 (from the example above) would you choose based on nested model comparison (\(F\) test)?

Note: anova(x,y,z) can be used as shorthand when x, y, z are nested models, and so on (anova(w,x,y,z)…).

3.5.3.2 Method 2: Stepwise variable selection

A common type of variable selection uses “choose automatically” for Component 3 above (how do we decide which model to prefer). In stepwise variable selection, we decide whether to add or drop terms based on any model comparison procedure (Component 1): AIC, BIC, \(F\) test with \(p<0.05\), etc.

There are various flavors of stepwise model selection, such as forwards, where you start with an empty model (intercept only) and add terms, and backwards, where you start with a full model and drop terms.

Example: Stepwise backwards selection using AIC

This is what the step function in R does by default.

Start with the complete regression model (all possible predictors) and obtain AIC

At each “step” we try all possible ways of removing one of the predictors, and whichever yields the lowest AIC value is kept.

Repeat steps 1-2 until we end up with a model with lower AIC value than any of the other possible models that you could produce by deleting one of its predictors.

Stepwise model selection is very popular, probably because it is easy to do using statistical software. It can be helpful to select among a huge set of predictors as a first pass, but has serious drawbacks.

Exercise: 25 potential predictors

Fit the same model with 25 potential predictors to two random halves of the english data, then perform stepwise backwards selection:

## split the English data in half, randomly:

set.seed(2903)

english.1 <- english %>% sample_n(round(nrow(english)/2))

english.2 <- english[!(row.names(english) %in% row.names(english.1)),]

## fit full model to each half-dataset

m1 <- lm(RTlexdec ~ (WrittenFrequency + Familiarity + AgeSubject + LengthInLetters + FamilySize)^3, english.1)

m2 <- lm(RTlexdec ~ (WrittenFrequency + Familiarity + AgeSubject + LengthInLetters + FamilySize)^3, english.2)

## trace=0 suppresses ouput

m1.stepped <- step(m1, trace=0)

m2.stepped <- step(m2, trace=0)

## the two resulting models

summary(m1.stepped)##

## Call: